Identifying Edible Plants

The Intent of this final project is to develop an image recognition program that can accurately identify edible and/or poisonous plants in the wild. This endeavor has been attempted by several apps and other programs - however all of these realize an edge architecture that relies on a remote server connection in order to upload the file and run through the model.

This paper explores the difference performance options in order to arrive at the best performing model. We then work to reduce the model size in order to fit on an edge device for real time diagnosis.

In order to get a baseline model for image recognition, we used a transfer learning technique where the model weights and architecture of ResNet50 was applied. ResNet50 was chosen for its performance as well as its size. Training on the volume of images for the duration that ResNet50 was done would not be reasonable - therefore we have used this baseline model to improve the baseline prediction. On top of this we explore different model architectures in order to define which architecture performs the best.

In order to get the best performing model we needed to remember to balance model performance with edge device performance. In the case of poisonous plants the consequences of a bad prediction can be high - however the utility of an app that takes 60 min to make a prediction is impacted. Therefore at the end of this notebook we examine the relationship with building the model on a virtual machine (for training) vs inference on the edge device (time to predict vs accuracy). The overall training and model structure is defined below - the larger resources consuming actions will be conducted in the cloud enabling inference at the edge - which provides an advantage to prior work as no active network is required for inference:

| |

This paper can be broken down into the following sections: 1. Exploratory Data Analysis & Understanding of the Training Dataset 2. Image Augmentation - impacts of different augmentation techniques and best performing augmentation 3. Discussion of Base Model choice 4. Model Architecture 5. Image Classification on VM - Model Peformance 6. Binary Classification on VM - Model Performance 7. Model Transfer to Edge Device and discussion of performance vs inference time & resource constraints 8. Conclusion

We begin by examining the training dataset:

from matplotlib.pyplot import imread, imshow, subplots, show

image = imread("ModelStructure.jpg")

images = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))

imshow(images[0])

show()

Section 1: Exploratory Data Analysis

Our traning datasets were downloaded from Kaggle [https://www.kaggle.com/gverzea/edible-wild-plants, https://www.kaggle.com/nitron/poisonous-plants-images]. The datasets are comprised of: - Total of 6962 pictures: - 6552 of these pictures are of edible wild plants - 410 pictures are of poisonous plants. - There are: - 62 categories of edible plants - 8 categories of poisonous plants

From this data it is evident that our dataset is skewed (more edible pictures and categories than poisonous). Additionally, the dataset does not comprise all wild plants - and is only a rather small subset of wild plants. We will treat this dataset as our primary for training purposes. We do have two other datasets that can be utlized for further training on a larger array of edible plant types. The average number of images per category is 99 - however we can see in the plot below that the mean count of image per category is closer to 50 with some categories having a large number of images.

| |

Having 50 images per class does indicate that there is hopefully some variability in terms of image orientation, quality, etc. Having this variety will have a beneficial impact on the training process. We can further improve the variety of images by utilizing image augmentation.

In addition to increasing image variety, augmentation also helps to increase the trianing dataset size. Because we have a limited dataset (considering we will be training on Dense, CNN or ReLu layers) we can utilize augmentation and increase both the variety and total number of images in order to improve our trianing process. Furthermore, In the latter portion of our analysis, we attempt to make a prediction of poisonous or edible (rather than plant category). Because we have a biased training set for the binary label problem - we will increase image count of the poisonous images by utilizing image augmentation - explained in the next section.

Depicted below are a few sample images taken from the datasets.

|

|

|

Section 2: Image Augmentation

With the baseline model established, we understand that there will likely be a difference in the cleanliness of the images taken for the training dataset, vs the images taken in the field when a user wants to succesfully identify a plant. We therefore utilize image augmentation, to achieve a couple of tasks:

- Affect the image quality, orientation, etc in order to make the model more versatile

- Create more training images in order to train the model.

Data augmentation encompasses a wide range of techniques used to generate new training samples from the original ones by randomly transforming the original image via a series of random translations, rotations, changing brightness levels of the image etc. Keras deep learning library provides the capability to use data augmentation when training a model.

Image data augmentation is supported in the Keras via the ImageDataGenerator class. This class generates batches of tensor image data with real-time data augmentation. The ImageDataGenerator class is instantiated and the configuration for different types of data augmentation are passed in the form of arguments to the class constructor.

ImageDataGenerator supports a range of data augmentation techniques as well as pixel scaling methods. We have focused on the below techniques for our dataset

- Width_shift_range and height_shift_range arguments - Image shifts horizontally and vertically

- horizontal_flip and vertical_flip arguments- Image flips horizontally and vertically

- rotation_range argument - Image rotates by specified degrees

- brightness_range argument - Image brightness levels are modified

- zoom_range argument - Image zoom levels are altered

Here's the original image:

from tensorflow.keras.preprocessing.image import ImageDataGenerator

from matplotlib.pyplot import imread, imshow, subplots, show

def plot_images(image_augmentor):

image_augmentor.fit(images)

augmented_images = image_augmentor.flow(images)

fig, rows = subplots(nrows=1, ncols=5, figsize=(18,18))

for row in rows:

row.imshow(augmented_images.next()[0].astype('int'))

row.axis('off')

show()

image = imread("asparagus2.jpg")

# Show the original image

images = image.reshape((1, image.shape[0], image.shape[1], image.shape[2]))

imshow(images[0])

show()

|

Examples of images after Data Augmentation

Horizontal and Vertical Shift Augmentation

The ImageDataGenerator constructor control the amount of horizontal and vertical shift using the arguments The width_shift_range and height_shift_range respectively, a number of pixels can be specified to shift the image.

A shift to an image means moving all pixels of the image in one direction, either horizontally or vertically, while keeping the image dimensions the same. The shift clips off some of the pixels from the image and there will be a region of the image where empty pixel values will have to be specified, by default the closest pixel value is chosen and repeated for all the empty values.

Horizontal Shift Augmentation

image_augmentor = ImageDataGenerator(width_shift_range=0.5)

plot_images(image_augmentor)

|

Vertical Shift Augmentation

image_augmentor = ImageDataGenerator(height_shift_range=0.3)

plot_images(image_augmentor)

|

Horizontal and Vertical Flip Augmentation

An image flip means reversing the rows or columns of pixels in the case of a vertical or horizontal flip respectively.

The flip augmentation is specified by a boolean horizontal_flip or vertical_flip argument to the ImageDataGenerator class constructor.

Horizontal Flip Augmentation

image_augmentor = ImageDataGenerator(horizontal_flip=True)

plot_images(image_augmentor)

|

Vertical Flip Augmentation

image_augmentor = ImageDataGenerator(vertical_flip=True)

plot_images(image_augmentor)

|

Rotation Augmentation

A rotation augmentation randomly rotates the image clockwise by a specified number of degrees from 0 to 360. The rotation will likely rotate pixels out of the image frame and leave areas of the frame with no pixel data that must be filled in, by default the closest pixel value is chosen and repeated for all the empty values.

image_augmentor = ImageDataGenerator(rotation_range=90)

plot_images(image_augmentor)

|

Brightness Augmentation

The brightness of the image can be augmented by either randomly changing the brightness levels of the image. generated images could be dark or light or both.

This helps the model to generalize across images captured in different lighting levels. brightness_range argument is passed to the ImageDataGenerator() constructor that specifies min and max range as a floating point number representing the percentage for selecting the brightness levels. 1.0 has no effect on brightness, Values less than 1.0 darken the image, e.g. [0.5, 1.0], whereas values larger than 1.0 brighten the image, e.g. [1.0, 1.5].

image_augmentor = ImageDataGenerator(brightness_range=(0.2, 1.5))

plot_images(image_augmentor)

|

Zoom Augmentation

Zoom augmentation randomly zooms the image in and out, either adds new pixel values around the image or interpolates pixel values respectively.

zoom_range argument is passed to the ImageDataGenerator constructor to enable Image zooming. You can specify the percentage of the zoom as a single float or a range.

If a float is specified, then the range for the zoom will be [1-value, 1+value]. For example, if you specify 0.3, then the range will be [0.7, 1.3], or between 70% (zoom in) and 130% (zoom out).

As seen below, random zoom in is different on both the width and height dimensions as well as the aspect ratio of the object in the image.

image_augmentor = ImageDataGenerator(zoom_range=[0.5, 1.5])

plot_images(image_augmentor)

|

Data augmentation makes the model more robust to slight variations, and hence prevents the model from overfitting.

Below we explore different effects of image augmentation and show below the effects of model performance:

Section 3: Base Model - ResNet Model Choice

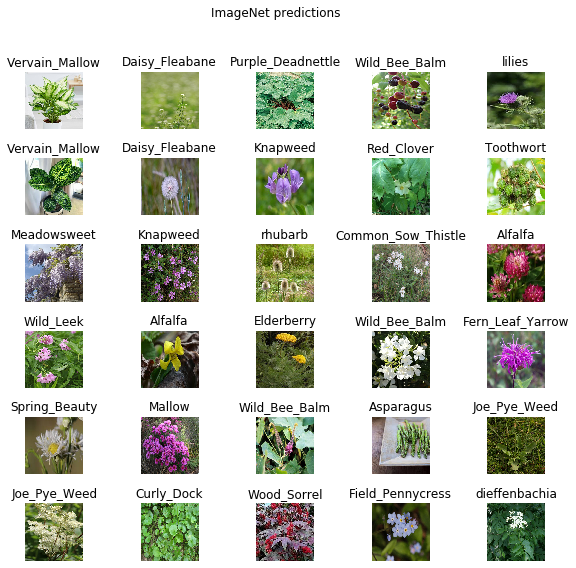

With the problem of image classification we understood that utilizing transfer learning would speed the training process. We chose to utilize the ResNet50 model due to its: - Accuracy proven on the ImageNet dataset - Overall size (50 ReLu layers)

The ResNet50 dataset was trained on the ImageNet dataset which is a large volume dataset with classes associated with common everyday items (broccoli, horse, etc). Reviewing the categories the model has already been trained on, it currently doesn't have any that match the classes in our training dataset - however it has been trained on many plant and food related items.

ResNet is a model built upon the Residual layer structure. It has been noted in literature where deeper networks tend to have lower accuracy compared to it's shallower counterpart. This is essentially because it can be hard for a dense layer to learn the y = x relationship when the training has become saturated. A Residual Layer has an impliminatation similar to y = F(x) + x where the function F(x) can reduce to 0 and bypass the degradation problem.

| |

Picture above is one layer of a residual layer which shows how the input can bypass the learned weights and become the output. This type of layer has shown high accuracy in image classification problems. He, K., Zhang, X., Ren, S., & Sun, J. (2015). Deep Residual Leraning for Image Recognition. Cornell University.

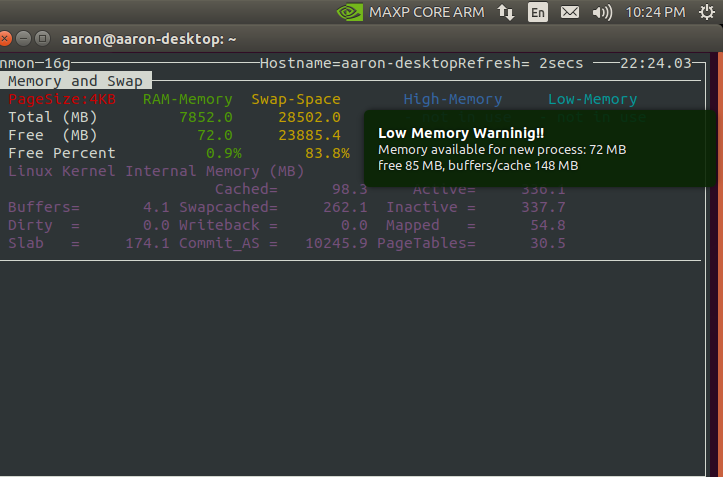

In addition to a well trained model we also needed to control the size and the inference time of the model. The intent of this paper is to train in a data center (where volume of data and computing power can be large) in order to bring the model to an edge device for inference. We therefore need to ensure the model is sufficiently small (such that the memory requirements won't exhaust the hardware of an edge device), fit on a limited storage device, and provide image inference at a reasonable time. Because of this constraint we chose to use ResNet50 as our baselayer vs ResNet 100, 1000 for example.

# Load ResNet50 baseline model

from tensorflow.keras.applications.resnet50 import ResNet50, preprocess_input

HEIGHT = 300

WIDTH = 300

base_model = ResNet50(weights='imagenet',

include_top=False,

input_shape=(HEIGHT, WIDTH, 3))

Image Classification Model Analysis

import os

from glob import glob

IMAGECLASS_TRAIN_DIR = "train"

IMAGECLASS_VALIDATION_DIR = "validation"

IMAGECLASS_TEST_DIR = "test"

result = [y for x in os.walk(IMAGECLASS_TRAIN_DIR) for y in glob(os.path.join(x[0], '*.jpg'))]

classes = list(set([y.split("/")[-2] for y in result]))

HEIGHT = 300

WIDTH = 300

BATCH_SIZE = 32

Train Data Generator

# Image augmentation

from tensorflow.keras.preprocessing.image import ImageDataGenerator

imageClass_train_datagen = ImageDataGenerator(

preprocessing_function=preprocess_input,

rescale=1./255,

width_shift_range=0.2,

height_shift_range=0.2,

horizontal_flip=True,

vertical_flip=True,

rotation_range=90,

zoom_range=[0.5, 1.5],

brightness_range=(0.1, 0.9)

)

imageClass_train_generator = imageClass_train_datagen.flow_from_directory(IMAGECLASS_TRAIN_DIR,

target_size=(HEIGHT, WIDTH),

batch_size=BATCH_SIZE,

class_mode='categorical')

Found 8000 images belonging to 70 classes.

Validation data generator

Generally, only apply data augmentation to the training examples. In this case, only rescale the validation images and convert them into batches using ImageDataGenerator.

imageClass_val_datagen = ImageDataGenerator(rescale=1./255)

imageClass_validation_generator = imageClass_val_datagen.flow_from_directory(batch_size=BATCH_SIZE,

directory=IMAGECLASS_VALIDATION_DIR,

target_size=(HEIGHT, WIDTH),

class_mode='categorical')

Found 479 images belonging to 70 classes.

Section 4: Model Architecture - Predicting Plant Class

Building on the baseline model, we have explored adding different layers (size, type, etc) in order to produce the 'best' performing model. See above and later discussions as to how we define 'best' model. For this paper we explored both accurately predicting the class of the plant in an image - which would help users understand more information about the specific plant they have taken the picture of, as well as predicting whether a plant is poisonous or not without the context of exact plant type.

We first explored keeping all the base model layers static (no change to the model weights) and only training on the additional layers - we explore the following layer architecture: - Dense layers (different nodes and depths) - CNN with pooling layers given the image classification problem - Residual layer based on our chosen base model architecture

In order to narrow model choice we chose to train for 100 epochs on 15,000 images with a batch size of 64. Our specific trials are captured and analyzed below. Below we show the code that was used during model training (both for image classification as well as binary classification (poison yes/no)). Below the model architecture we detail performance of varying model architectures.

base_model.output.shape

TensorShape([Dimension(None), Dimension(10), Dimension(10), Dimension(2048)])

from tensorflow.keras.layers import Dense, Activation, Flatten, Dropout, Conv2D, MaxPooling2D

from tensorflow.keras.models import Sequential, Model

def build_finetune_model(base_model, dropout, fc_layers, num_classes):

for layer in base_model.layers:

layer.trainable = True

x = base_model.output

# x = Conv2D(32, kernel_size=(3, 3),

# activation='relu',

# input_shape=base_model.output.shape)(x)

# #x = Conv2D(64, (3, 3), activation='relu')(x)

# x = MaxPooling2D(pool_size=(2, 2))(x)

# x = Dropout(dropout)(x)

# #x.add(Flatten())

# Add CNN

# x = Conv2D(32, kernel_size=(3, 3),

# activation='relu')(x)

# x = Conv2D(64, (3, 3), activation='relu')(x)

# x = MaxPooling2D(pool_size=(2, 2))(x)

# Add Residual Layers

# conv1 = Conv2D(64, (3,3), padding = 'same', activation = 'relu', kernel_initializer='he_normal')(x)

# conv2 = Conv2D(2048,(3,3), padding = 'same', activation = 'linear', kernel_initializer='he_normal')(conv1)

# layer_out = add([conv2, x])

# x = Activation('relu')(layer_out)

# conv1 = Conv2D(64, (3,3), padding = 'same', activation = 'relu', kernel_initializer='he_normal')(x)

# conv2 = Conv2D(2048,(3,3), padding = 'same', activation = 'linear', kernel_initializer='he_normal')(conv1)

# layer_out = add([conv2, x])

# x = Activation('relu')(layer_out)

# conv1 = Conv2D(64, (3,3), padding = 'same', activation = 'relu', kernel_initializer='he_normal')(x)

# conv2 = Conv2D(2048,(3,3), padding = 'same', activation = 'linear', kernel_initializer='he_normal')(conv1)

# layer_out = add([conv2, x])

# x = Activation('relu')(layer_out)

# conv1 = Conv2D(64, (3,3), padding = 'same', activation = 'relu', kernel_initializer='he_normal')(x)

# conv2 = Conv2D(2048,(3,3), padding = 'same', activation = 'linear', kernel_initializer='he_normal')(conv1)

# layer_out = add([conv2, x])

# x = Activation('relu')(layer_out)

# conv1 = Conv2D(64, (3,3), padding = 'same', activation = 'relu', kernel_initializer='he_normal')(x)

# conv2 = Conv2D(2048,(3,3), padding = 'same', activation = 'linear', kernel_initializer='he_normal')(conv1)

# layer_out = add([conv2, x])

# x = Activation('relu')(layer_out)

x = Flatten()(x)

for fc in fc_layers:

# Can look here if adding different types of layers has an effect

# Also explore differences in changing activation function

# Can also iterate on droupout amount

x = Dense(fc, activation='relu')(x)

x = Dropout(dropout)(x)

# New softmax layer

predictions = Dense(num_classes, activation='softmax')(x)

finetune_model = Model(inputs=base_model.input, outputs=predictions)

return finetune_model

# Can change the model architecture here

FC_LAYERS = [128,32]

dropout = 0.3

imageClass_finetune_model = build_finetune_model(base_model,

dropout=dropout,

fc_layers=FC_LAYERS,

num_classes=len(classes))

WARNING:tensorflow:From /opt/conda/lib/python3.6/site-packages/tensorflow/python/keras/layers/core.py:143: calling dropout (from tensorflow.python.ops.nn_ops) with keep_prob is deprecated and will be removed in a future version.

Instructions for updating:

Please use `rate` instead of `keep_prob`. Rate should be set to `rate = 1 - keep_prob`.

from tensorflow.keras.optimizers import SGD, Adam

from tensorflow.keras.callbacks import ModelCheckpoint

import tensorflow as tf

import datetime

import numpy

# For the baseline model will use 100 epochs and 15000 images to test model performance

# Will then use 'optimized' model parameters to train for longer time and explore

# Size vs Accuracy for edge compute purposes

NUM_EPOCHS = 100

BATCH_SIZE = 64

num_train_images = 15000

num_val_images = 479

adam = Adam(lr=0.00001)

# Can look into whether

#finetune_model.compile(adam, loss='categorical_crossentropy', metrics=['accuracy'])

imageClass_finetune_model.compile(adam, loss='categorical_crossentropy', metrics=['categorical_accuracy'])

# Checkpoin is overwritten at each epoch - can look at line below where datetime is used to create time based file names

filepath="/root/w251_finalproject/checkpoint/model"

checkpoint = ModelCheckpoint(filepath, monitor=["categorical_accuracy"], verbose=1, mode='max')

# history = finetune_model.fit_generator(train_generator, epochs=NUM_EPOCHS,

# steps_per_epoch=num_train_images // BATCH_SIZE,

# shuffle=True)# , callbacks=[checkpoint])

imageClass_history = imageClass_finetune_model.fit_generator(

imageClass_train_generator,

steps_per_epoch= num_train_images // BATCH_SIZE,

epochs=NUM_EPOCHS,

validation_data=imageClass_validation_generator,

validation_steps= num_val_images // BATCH_SIZE

)

WARNING:tensorflow:From /opt/conda/lib/python3.6/site-packages/tensorflow/python/ops/math_ops.py:3066: to_int32 (from tensorflow.python.ops.math_ops) is deprecated and will be removed in a future version.

Instructions for updating:

Use tf.cast instead.

Epoch 1/100

15/15 [==============================] - 16s 1s/step - loss: 4.6113 - categorical_accuracy: 0.0021

250/250 [==============================] - 275s 1s/step - loss: 4.0240 - categorical_accuracy: 0.0878 - val_loss: 4.6113 - val_categorical_accuracy: 0.0021

Epoch 2/100

15/15 [==============================] - 14s 917ms/step - loss: 4.4534 - categorical_accuracy: 0.0146

250/250 [==============================] - 257s 1s/step - loss: 3.5324 - categorical_accuracy: 0.1896 - val_loss: 4.4534 - val_categorical_accuracy: 0.0146

Epoch 3/100

15/15 [==============================] - 15s 971ms/step - loss: 4.0701 - categorical_accuracy: 0.1065

250/250 [==============================] - 259s 1s/step - loss: 3.3367 - categorical_accuracy: 0.2323 - val_loss: 4.0701 - val_categorical_accuracy: 0.1065

Epoch 4/100

15/15 [==============================] - 15s 967ms/step - loss: 3.9508 - categorical_accuracy: 0.1211

250/250 [==============================] - 255s 1s/step - loss: 3.2017 - categorical_accuracy: 0.2606 - val_loss: 3.9508 - val_categorical_accuracy: 0.1211

Epoch 5/100

15/15 [==============================] - 14s 965ms/step - loss: 4.0411 - categorical_accuracy: 0.1148

250/250 [==============================] - 255s 1s/step - loss: 3.1179 - categorical_accuracy: 0.2804 - val_loss: 4.0411 - val_categorical_accuracy: 0.1148

Epoch 6/100

15/15 [==============================] - 14s 946ms/step - loss: 4.2681 - categorical_accuracy: 0.1065

250/250 [==============================] - 255s 1s/step - loss: 2.9306 - categorical_accuracy: 0.3212 - val_loss: 4.2681 - val_categorical_accuracy: 0.1065

Epoch 8/100

15/15 [==============================] - 15s 968ms/step - loss: 3.9663 - categorical_accuracy: 0.1378

250/250 [==============================] - 256s 1s/step - loss: 2.8664 - categorical_accuracy: 0.3361 - val_loss: 3.9663 - val_categorical_accuracy: 0.1378

Epoch 9/100

15/15 [==============================] - 14s 964ms/step - loss: 3.9804 - categorical_accuracy: 0.1608

250/250 [==============================] - 256s 1s/step - loss: 2.7547 - categorical_accuracy: 0.3501 - val_loss: 3.9804 - val_categorical_accuracy: 0.1608

Epoch 10/100

15/15 [==============================] - 15s 1s/step - loss: 3.7974 - categorical_accuracy: 0.1670

250/250 [==============================] - 258s 1s/step - loss: 2.6922 - categorical_accuracy: 0.3721 - val_loss: 3.7974 - val_categorical_accuracy: 0.1670

Epoch 11/100

15/15 [==============================] - 14s 921ms/step - loss: 3.8230 - categorical_accuracy: 0.1816

250/250 [==============================] - 255s 1s/step - loss: 2.6142 - categorical_accuracy: 0.3760 - val_loss: 3.8230 - val_categorical_accuracy: 0.1816

Epoch 12/100

15/15 [==============================] - 15s 967ms/step - loss: 3.7753 - categorical_accuracy: 0.1858

250/250 [==============================] - 257s 1s/step - loss: 2.5413 - categorical_accuracy: 0.3975 - val_loss: 3.7753 - val_categorical_accuracy: 0.1858

Epoch 13/100

15/15 [==============================] - 14s 965ms/step - loss: 3.6889 - categorical_accuracy: 0.1921

250/250 [==============================] - 258s 1s/step - loss: 2.4730 - categorical_accuracy: 0.4126 - val_loss: 3.6889 - val_categorical_accuracy: 0.1921

Epoch 14/100

15/15 [==============================] - 14s 939ms/step - loss: 3.6455 - categorical_accuracy: 0.2276

250/250 [==============================] - 255s 1s/step - loss: 2.3795 - categorical_accuracy: 0.4391 - val_loss: 3.6455 - val_categorical_accuracy: 0.2276

Epoch 15/100

15/15 [==============================] - 14s 965ms/step - loss: 3.6641 - categorical_accuracy: 0.2234

250/250 [==============================] - 257s 1s/step - loss: 2.3306 - categorical_accuracy: 0.4415 - val_loss: 3.6641 - val_categorical_accuracy: 0.2234

Epoch 16/100

15/15 [==============================] - 14s 948ms/step - loss: 3.7792 - categorical_accuracy: 0.1942

250/250 [==============================] - 257s 1s/step - loss: 2.2660 - categorical_accuracy: 0.4557 - val_loss: 3.7792 - val_categorical_accuracy: 0.1942

Epoch 17/100

15/15 [==============================] - 14s 941ms/step - loss: 3.6185 - categorical_accuracy: 0.2589

250/250 [==============================] - 257s 1s/step - loss: 2.2083 - categorical_accuracy: 0.4675 - val_loss: 3.6185 - val_categorical_accuracy: 0.2589

Epoch 18/100

15/15 [==============================] - 15s 967ms/step - loss: 3.6167 - categorical_accuracy: 0.2463

250/250 [==============================] - 258s 1s/step - loss: 2.1713 - categorical_accuracy: 0.4840 - val_loss: 3.6167 - val_categorical_accuracy: 0.2463

Epoch 19/100

15/15 [==============================] - 15s 993ms/step - loss: 3.5697 - categorical_accuracy: 0.2401

250/250 [==============================] - 256s 1s/step - loss: 2.1389 - categorical_accuracy: 0.4877 - val_loss: 3.5697 - val_categorical_accuracy: 0.2401

Epoch 20/100

15/15 [==============================] - 15s 1s/step - loss: 3.5496 - categorical_accuracy: 0.2568

250/250 [==============================] - 258s 1s/step - loss: 2.0664 - categorical_accuracy: 0.5042 - val_loss: 3.5496 - val_categorical_accuracy: 0.2568

Epoch 21/100

15/15 [==============================] - 15s 1s/step - loss: 3.5511 - categorical_accuracy: 0.2484

250/250 [==============================] - 259s 1s/step - loss: 1.9967 - categorical_accuracy: 0.5138 - val_loss: 3.5511 - val_categorical_accuracy: 0.2484

Epoch 22/100

15/15 [==============================] - 15s 969ms/step - loss: 3.5397 - categorical_accuracy: 0.2484

250/250 [==============================] - 254s 1s/step - loss: 1.9682 - categorical_accuracy: 0.5229 - val_loss: 3.5397 - val_categorical_accuracy: 0.2484

Epoch 23/100

15/15 [==============================] - 15s 981ms/step - loss: 3.5904 - categorical_accuracy: 0.2025

250/250 [==============================] - 253s 1s/step - loss: 1.9404 - categorical_accuracy: 0.5297 - val_loss: 3.5904 - val_categorical_accuracy: 0.2025

Epoch 24/100

15/15 [==============================] - 14s 950ms/step - loss: 3.5082 - categorical_accuracy: 0.2881

250/250 [==============================] - 257s 1s/step - loss: 1.8630 - categorical_accuracy: 0.5409 - val_loss: 3.5082 - val_categorical_accuracy: 0.2881

Epoch 25/100

15/15 [==============================] - 14s 924ms/step - loss: 3.5616 - categorical_accuracy: 0.2317

250/250 [==============================] - 256s 1s/step - loss: 1.8223 - categorical_accuracy: 0.5524 - val_loss: 3.5616 - val_categorical_accuracy: 0.2317

Epoch 26/100

15/15 [==============================] - 14s 913ms/step - loss: 3.5328 - categorical_accuracy: 0.2672

250/250 [==============================] - 256s 1s/step - loss: 1.7936 - categorical_accuracy: 0.5583 - val_loss: 3.5328 - val_categorical_accuracy: 0.2672

Epoch 27/100

15/15 [==============================] - 13s 888ms/step - loss: 3.5321 - categorical_accuracy: 0.2672

250/250 [==============================] - 257s 1s/step - loss: 1.7634 - categorical_accuracy: 0.5666 - val_loss: 3.5321 - val_categorical_accuracy: 0.2672

Epoch 28/100

15/15 [==============================] - 14s 966ms/step - loss: 3.5500 - categorical_accuracy: 0.2777

250/250 [==============================] - 256s 1s/step - loss: 1.7230 - categorical_accuracy: 0.5836 - val_loss: 3.5500 - val_categorical_accuracy: 0.2777

Epoch 29/100

15/15 [==============================] - 14s 906ms/step - loss: 3.4617 - categorical_accuracy: 0.2818

250/250 [==============================] - 255s 1s/step - loss: 1.6478 - categorical_accuracy: 0.5955 - val_loss: 3.4617 - val_categorical_accuracy: 0.2818

Epoch 30/100

15/15 [==============================] - 15s 986ms/step - loss: 3.6146 - categorical_accuracy: 0.2651

250/250 [==============================] - 262s 1s/step - loss: 1.6235 - categorical_accuracy: 0.6016 - val_loss: 3.6146 - val_categorical_accuracy: 0.2651

Epoch 31/100

15/15 [==============================] - 15s 997ms/step - loss: 3.4886 - categorical_accuracy: 0.3027

250/250 [==============================] - 261s 1s/step - loss: 1.5974 - categorical_accuracy: 0.6085 - val_loss: 3.4886 - val_categorical_accuracy: 0.3027

Epoch 32/100

15/15 [==============================] - 15s 968ms/step - loss: 3.5459 - categorical_accuracy: 0.2839

250/250 [==============================] - 259s 1s/step - loss: 1.5677 - categorical_accuracy: 0.6140 - val_loss: 3.5459 - val_categorical_accuracy: 0.2839

Epoch 33/100

15/15 [==============================] - 15s 983ms/step - loss: 3.5736 - categorical_accuracy: 0.3006

250/250 [==============================] - 259s 1s/step - loss: 1.5307 - categorical_accuracy: 0.6216 - val_loss: 3.5736 - val_categorical_accuracy: 0.3006

Epoch 34/100

15/15 [==============================] - 14s 908ms/step - loss: 3.4280 - categorical_accuracy: 0.3027

250/250 [==============================] - 257s 1s/step - loss: 1.4952 - categorical_accuracy: 0.6324 - val_loss: 3.4280 - val_categorical_accuracy: 0.3027

Epoch 35/100

15/15 [==============================] - 15s 998ms/step - loss: 3.4712 - categorical_accuracy: 0.2881

250/250 [==============================] - 256s 1s/step - loss: 1.4786 - categorical_accuracy: 0.6285 - val_loss: 3.4712 - val_categorical_accuracy: 0.2881

Epoch 36/100

15/15 [==============================] - 15s 1s/step - loss: 3.4030 - categorical_accuracy: 0.2965

250/250 [==============================] - 259s 1s/step - loss: 1.4276 - categorical_accuracy: 0.6444 - val_loss: 3.4030 - val_categorical_accuracy: 0.2965

Epoch 37/100

15/15 [==============================] - 14s 952ms/step - loss: 3.5726 - categorical_accuracy: 0.2944

250/250 [==============================] - 256s 1s/step - loss: 1.3896 - categorical_accuracy: 0.6544 - val_loss: 3.5726 - val_categorical_accuracy: 0.2944

Epoch 38/100

15/15 [==============================] - 14s 956ms/step - loss: 3.4549 - categorical_accuracy: 0.3027

250/250 [==============================] - 257s 1s/step - loss: 1.3655 - categorical_accuracy: 0.6545 - val_loss: 3.4549 - val_categorical_accuracy: 0.3027

Epoch 39/100

15/15 [==============================] - 14s 943ms/step - loss: 3.5666 - categorical_accuracy: 0.3173

250/250 [==============================] - 256s 1s/step - loss: 1.3410 - categorical_accuracy: 0.6658 - val_loss: 3.5666 - val_categorical_accuracy: 0.3173

Epoch 40/100

15/15 [==============================] - 14s 966ms/step - loss: 3.6170 - categorical_accuracy: 0.3048

250/250 [==============================] - 257s 1s/step - loss: 1.3112 - categorical_accuracy: 0.6714 - val_loss: 3.6170 - val_categorical_accuracy: 0.3048

Epoch 41/100

15/15 [==============================] - 15s 1s/step - loss: 3.5953 - categorical_accuracy: 0.3319

250/250 [==============================] - 260s 1s/step - loss: 1.2718 - categorical_accuracy: 0.6812 - val_loss: 3.5953 - val_categorical_accuracy: 0.3319

Epoch 42/100

15/15 [==============================] - 14s 931ms/step - loss: 3.5098 - categorical_accuracy: 0.3194

250/250 [==============================] - 258s 1s/step - loss: 1.2447 - categorical_accuracy: 0.6844 - val_loss: 3.5098 - val_categorical_accuracy: 0.3194

Epoch 43/100

15/15 [==============================] - 15s 971ms/step - loss: 3.6556 - categorical_accuracy: 0.3152

250/250 [==============================] - 259s 1s/step - loss: 1.2331 - categorical_accuracy: 0.6902 - val_loss: 3.6556 - val_categorical_accuracy: 0.3152

Epoch 44/100

15/15 [==============================] - 15s 1000ms/step - loss: 3.5623 - categorical_accuracy: 0.3215

250/250 [==============================] - 257s 1s/step - loss: 1.2130 - categorical_accuracy: 0.6959 - val_loss: 3.5623 - val_categorical_accuracy: 0.3215

Epoch 45/100

15/15 [==============================] - 15s 978ms/step - loss: 3.6266 - categorical_accuracy: 0.3215

250/250 [==============================] - 256s 1s/step - loss: 1.2125 - categorical_accuracy: 0.6986 - val_loss: 3.6266 - val_categorical_accuracy: 0.3215

Epoch 46/100

15/15 [==============================] - 15s 1s/step - loss: 3.6584 - categorical_accuracy: 0.3215

250/250 [==============================] - 258s 1s/step - loss: 1.1844 - categorical_accuracy: 0.6992 - val_loss: 3.6584 - val_categorical_accuracy: 0.3215

Epoch 47/100

15/15 [==============================] - 14s 937ms/step - loss: 3.5813 - categorical_accuracy: 0.2881

250/250 [==============================] - 256s 1s/step - loss: 1.1422 - categorical_accuracy: 0.7136 - val_loss: 3.5813 - val_categorical_accuracy: 0.2881

Epoch 48/100

15/15 [==============================] - 15s 985ms/step - loss: 3.8531 - categorical_accuracy: 0.2797

250/250 [==============================] - 259s 1s/step - loss: 1.1035 - categorical_accuracy: 0.7182 - val_loss: 3.8531 - val_categorical_accuracy: 0.2797

Epoch 49/100

15/15 [==============================] - 13s 899ms/step - loss: 3.6830 - categorical_accuracy: 0.3111

250/250 [==============================] - 254s 1s/step - loss: 1.0691 - categorical_accuracy: 0.7261 - val_loss: 3.6830 - val_categorical_accuracy: 0.3111

Epoch 50/100

15/15 [==============================] - 14s 918ms/step - loss: 3.6863 - categorical_accuracy: 0.3340

250/250 [==============================] - 258s 1s/step - loss: 1.0809 - categorical_accuracy: 0.7249 - val_loss: 3.6863 - val_categorical_accuracy: 0.3340

Epoch 51/100

15/15 [==============================] - 14s 937ms/step - loss: 3.7512 - categorical_accuracy: 0.3486

250/250 [==============================] - 259s 1s/step - loss: 1.0247 - categorical_accuracy: 0.7364 - val_loss: 3.7512 - val_categorical_accuracy: 0.3486

Epoch 52/100

15/15 [==============================] - 14s 913ms/step - loss: 3.9033 - categorical_accuracy: 0.3215

250/250 [==============================] - 261s 1s/step - loss: 0.9978 - categorical_accuracy: 0.7473 - val_loss: 3.9033 - val_categorical_accuracy: 0.3215

Epoch 54/100

15/15 [==============================] - 15s 1s/step - loss: 3.7908 - categorical_accuracy: 0.3299

250/250 [==============================] - 260s 1s/step - loss: 0.9881 - categorical_accuracy: 0.7496 - val_loss: 3.7908 - val_categorical_accuracy: 0.3299

Epoch 55/100

15/15 [==============================] - 14s 966ms/step - loss: 4.0360 - categorical_accuracy: 0.3466

250/250 [==============================] - 262s 1s/step - loss: 0.9534 - categorical_accuracy: 0.7566 - val_loss: 4.0360 - val_categorical_accuracy: 0.3466

Epoch 56/100

15/15 [==============================] - 14s 930ms/step - loss: 3.8476 - categorical_accuracy: 0.3507

250/250 [==============================] - 256s 1s/step - loss: 0.9511 - categorical_accuracy: 0.7573 - val_loss: 3.8476 - val_categorical_accuracy: 0.3507

Epoch 57/100

15/15 [==============================] - 14s 958ms/step - loss: 3.9393 - categorical_accuracy: 0.3299

250/250 [==============================] - 257s 1s/step - loss: 0.9410 - categorical_accuracy: 0.7561 - val_loss: 3.9393 - val_categorical_accuracy: 0.3299

Epoch 58/100

15/15 [==============================] - 15s 985ms/step - loss: 3.7561 - categorical_accuracy: 0.3633

250/250 [==============================] - 256s 1s/step - loss: 0.8995 - categorical_accuracy: 0.7704 - val_loss: 3.7561 - val_categorical_accuracy: 0.3633

Epoch 59/100

15/15 [==============================] - 14s 937ms/step - loss: 3.8762 - categorical_accuracy: 0.3194

250/250 [==============================] - 258s 1s/step - loss: 0.8891 - categorical_accuracy: 0.7661 - val_loss: 3.8762 - val_categorical_accuracy: 0.3194

Epoch 60/100

15/15 [==============================] - 15s 970ms/step - loss: 3.8328 - categorical_accuracy: 0.3633

250/250 [==============================] - 257s 1s/step - loss: 0.8770 - categorical_accuracy: 0.7700 - val_loss: 3.8328 - val_categorical_accuracy: 0.3633

Epoch 61/100

15/15 [==============================] - 14s 945ms/step - loss: 3.9193 - categorical_accuracy: 0.3215

250/250 [==============================] - 257s 1s/step - loss: 0.8926 - categorical_accuracy: 0.7691 - val_loss: 3.9193 - val_categorical_accuracy: 0.3215

Epoch 62/100

15/15 [==============================] - 14s 940ms/step - loss: 3.8608 - categorical_accuracy: 0.3236

250/250 [==============================] - 261s 1s/step - loss: 0.8651 - categorical_accuracy: 0.7757 - val_loss: 3.8608 - val_categorical_accuracy: 0.3236

Epoch 64/100

15/15 [==============================] - 14s 963ms/step - loss: 3.8405 - categorical_accuracy: 0.3570

250/250 [==============================] - 256s 1s/step - loss: 0.8078 - categorical_accuracy: 0.7897 - val_loss: 3.8405 - val_categorical_accuracy: 0.3570

Epoch 65/100

15/15 [==============================] - 14s 923ms/step - loss: 3.8981 - categorical_accuracy: 0.3382

250/250 [==============================] - 259s 1s/step - loss: 0.8157 - categorical_accuracy: 0.7897 - val_loss: 3.8981 - val_categorical_accuracy: 0.3382

Epoch 66/100

15/15 [==============================] - 14s 933ms/step - loss: 3.8738 - categorical_accuracy: 0.3633

250/250 [==============================] - 256s 1s/step - loss: 0.7686 - categorical_accuracy: 0.7964 - val_loss: 3.8738 - val_categorical_accuracy: 0.3633

Epoch 67/100

15/15 [==============================] - 14s 952ms/step - loss: 3.9339 - categorical_accuracy: 0.3507

250/250 [==============================] - 259s 1s/step - loss: 0.7749 - categorical_accuracy: 0.7993 - val_loss: 3.9339 - val_categorical_accuracy: 0.3507

Epoch 68/100

15/15 [==============================] - 14s 946ms/step - loss: 3.9571 - categorical_accuracy: 0.3466

250/250 [==============================] - 257s 1s/step - loss: 0.7536 - categorical_accuracy: 0.8021 - val_loss: 3.9571 - val_categorical_accuracy: 0.3466

Epoch 69/100

15/15 [==============================] - 15s 969ms/step - loss: 3.8007 - categorical_accuracy: 0.3466

250/250 [==============================] - 255s 1s/step - loss: 0.7473 - categorical_accuracy: 0.8030 - val_loss: 3.8007 - val_categorical_accuracy: 0.3466

Epoch 70/100

15/15 [==============================] - 14s 952ms/step - loss: 3.9706 - categorical_accuracy: 0.3236

250/250 [==============================] - 258s 1s/step - loss: 0.7411 - categorical_accuracy: 0.8111 - val_loss: 3.9706 - val_categorical_accuracy: 0.3236

Epoch 71/100

15/15 [==============================] - 15s 992ms/step - loss: 4.0217 - categorical_accuracy: 0.3090

250/250 [==============================] - 260s 1s/step - loss: 0.7040 - categorical_accuracy: 0.8175 - val_loss: 4.0217 - val_categorical_accuracy: 0.3090

Epoch 73/100

15/15 [==============================] - 15s 984ms/step - loss: 4.2207 - categorical_accuracy: 0.3236

250/250 [==============================] - 260s 1s/step - loss: 0.7035 - categorical_accuracy: 0.8163 - val_loss: 4.2207 - val_categorical_accuracy: 0.3236

Epoch 74/100

15/15 [==============================] - 15s 1s/step - loss: 4.0939 - categorical_accuracy: 0.3424

250/250 [==============================] - 261s 1s/step - loss: 0.6778 - categorical_accuracy: 0.8236 - val_loss: 4.0939 - val_categorical_accuracy: 0.3424

Epoch 75/100

15/15 [==============================] - 14s 948ms/step - loss: 4.2614 - categorical_accuracy: 0.3048

250/250 [==============================] - 260s 1s/step - loss: 0.6627 - categorical_accuracy: 0.8261 - val_loss: 4.2614 - val_categorical_accuracy: 0.3048

Epoch 76/100

15/15 [==============================] - 14s 964ms/step - loss: 4.1499 - categorical_accuracy: 0.3466

250/250 [==============================] - 260s 1s/step - loss: 0.6598 - categorical_accuracy: 0.8281 - val_loss: 4.1499 - val_categorical_accuracy: 0.3466

Epoch 77/100

15/15 [==============================] - 14s 955ms/step - loss: 4.0080 - categorical_accuracy: 0.3612

250/250 [==============================] - 258s 1s/step - loss: 0.6407 - categorical_accuracy: 0.8349 - val_loss: 4.0080 - val_categorical_accuracy: 0.3612

Epoch 78/100

15/15 [==============================] - 15s 1s/step - loss: 3.9263 - categorical_accuracy: 0.3779

250/250 [==============================] - 260s 1s/step - loss: 0.6510 - categorical_accuracy: 0.8344 - val_loss: 3.9263 - val_categorical_accuracy: 0.3779

Epoch 79/100

15/15 [==============================] - 16s 1s/step - loss: 4.1673 - categorical_accuracy: 0.3570

250/250 [==============================] - 256s 1s/step - loss: 0.6200 - categorical_accuracy: 0.8320 - val_loss: 4.1673 - val_categorical_accuracy: 0.3570

Epoch 80/100

15/15 [==============================] - 14s 924ms/step - loss: 3.9761 - categorical_accuracy: 0.3841

250/250 [==============================] - 254s 1s/step - loss: 0.6182 - categorical_accuracy: 0.8349 - val_loss: 3.9761 - val_categorical_accuracy: 0.3841

Epoch 81/100

15/15 [==============================] - 14s 952ms/step - loss: 4.0217 - categorical_accuracy: 0.3653

250/250 [==============================] - 259s 1s/step - loss: 0.6103 - categorical_accuracy: 0.8404 - val_loss: 4.0217 - val_categorical_accuracy: 0.3653

Epoch 82/100

15/15 [==============================] - 14s 951ms/step - loss: 4.1091 - categorical_accuracy: 0.3695

250/250 [==============================] - 258s 1s/step - loss: 0.6012 - categorical_accuracy: 0.8440 - val_loss: 4.1091 - val_categorical_accuracy: 0.3695

Epoch 83/100

15/15 [==============================] - 14s 936ms/step - loss: 4.3138 - categorical_accuracy: 0.3612

250/250 [==============================] - 258s 1s/step - loss: 0.5759 - categorical_accuracy: 0.8465 - val_loss: 4.3138 - val_categorical_accuracy: 0.3612

Epoch 84/100

15/15 [==============================] - 15s 993ms/step - loss: 4.2785 - categorical_accuracy: 0.3633

250/250 [==============================] - 263s 1s/step - loss: 0.5842 - categorical_accuracy: 0.8461 - val_loss: 4.2785 - val_categorical_accuracy: 0.3633

Epoch 85/100

15/15 [==============================] - 14s 942ms/step - loss: 4.4895 - categorical_accuracy: 0.3507

250/250 [==============================] - 257s 1s/step - loss: 0.5625 - categorical_accuracy: 0.8519 - val_loss: 4.4895 - val_categorical_accuracy: 0.3507

Epoch 86/100

15/15 [==============================] - 14s 946ms/step - loss: 4.0008 - categorical_accuracy: 0.3841

250/250 [==============================] - 258s 1s/step - loss: 0.5547 - categorical_accuracy: 0.8559 - val_loss: 4.0008 - val_categorical_accuracy: 0.3841

Epoch 87/100

15/15 [==============================] - 14s 924ms/step - loss: 4.3086 - categorical_accuracy: 0.3591

250/250 [==============================] - 257s 1s/step - loss: 0.5477 - categorical_accuracy: 0.8593 - val_loss: 4.3086 - val_categorical_accuracy: 0.3591

Epoch 88/100

15/15 [==============================] - 14s 932ms/step - loss: 4.1903 - categorical_accuracy: 0.3633

250/250 [==============================] - 258s 1s/step - loss: 0.5408 - categorical_accuracy: 0.8590 - val_loss: 4.1903 - val_categorical_accuracy: 0.3633

Epoch 89/100

15/15 [==============================] - 15s 983ms/step - loss: 3.9609 - categorical_accuracy: 0.3925

250/250 [==============================] - 262s 1s/step - loss: 0.5284 - categorical_accuracy: 0.8569 - val_loss: 3.9609 - val_categorical_accuracy: 0.3925

Epoch 90/100

15/15 [==============================] - 15s 969ms/step - loss: 4.3176 - categorical_accuracy: 0.3319

250/250 [==============================] - 257s 1s/step - loss: 0.5195 - categorical_accuracy: 0.8571 - val_loss: 4.3176 - val_categorical_accuracy: 0.3319

Epoch 91/100

15/15 [==============================] - 15s 1s/step - loss: 4.3151 - categorical_accuracy: 0.3612

250/250 [==============================] - 258s 1s/step - loss: 0.4904 - categorical_accuracy: 0.8698 - val_loss: 4.3151 - val_categorical_accuracy: 0.3612

Epoch 92/100

15/15 [==============================] - 15s 967ms/step - loss: 4.1877 - categorical_accuracy: 0.3612

250/250 [==============================] - 257s 1s/step - loss: 0.5116 - categorical_accuracy: 0.8645 - val_loss: 4.1877 - val_categorical_accuracy: 0.3612

Epoch 93/100

15/15 [==============================] - 15s 982ms/step - loss: 4.2800 - categorical_accuracy: 0.3674

250/250 [==============================] - 256s 1s/step - loss: 0.4985 - categorical_accuracy: 0.8670 - val_loss: 4.2800 - val_categorical_accuracy: 0.3674

Epoch 94/100

15/15 [==============================] - 14s 923ms/step - loss: 4.2524 - categorical_accuracy: 0.3674

250/250 [==============================] - 256s 1s/step - loss: 0.5113 - categorical_accuracy: 0.8652 - val_loss: 4.2524 - val_categorical_accuracy: 0.3674

Epoch 95/100

15/15 [==============================] - 15s 987ms/step - loss: 4.3309 - categorical_accuracy: 0.3633

250/250 [==============================] - 260s 1s/step - loss: 0.4773 - categorical_accuracy: 0.8708 - val_loss: 4.3309 - val_categorical_accuracy: 0.3633

Epoch 96/100

15/15 [==============================] - 14s 926ms/step - loss: 4.3465 - categorical_accuracy: 0.3674

250/250 [==============================] - 255s 1s/step - loss: 0.4611 - categorical_accuracy: 0.8808 - val_loss: 4.3465 - val_categorical_accuracy: 0.3674

Epoch 97/100

15/15 [==============================] - 14s 925ms/step - loss: 4.3581 - categorical_accuracy: 0.3820

250/250 [==============================] - 259s 1s/step - loss: 0.4688 - categorical_accuracy: 0.8735 - val_loss: 4.3581 - val_categorical_accuracy: 0.3820

Epoch 98/100

15/15 [==============================] - 15s 990ms/step - loss: 4.3597 - categorical_accuracy: 0.3779

250/250 [==============================] - 260s 1s/step - loss: 0.4677 - categorical_accuracy: 0.8754 - val_loss: 4.3597 - val_categorical_accuracy: 0.3779

Epoch 99/100

15/15 [==============================] - 15s 973ms/step - loss: 4.5483 - categorical_accuracy: 0.3674

250/250 [==============================] - 258s 1s/step - loss: 0.4481 - categorical_accuracy: 0.8826 - val_loss: 4.5483 - val_categorical_accuracy: 0.3674

Epoch 100/100

15/15 [==============================] - 15s 971ms/step - loss: 4.4849 - categorical_accuracy: 0.3674

250/250 [==============================] - 259s 1s/step - loss: 0.4404 - categorical_accuracy: 0.8810 - val_loss: 4.4849 - val_categorical_accuracy: 0.3674

# Save model performance in order to plot / compare later

numpy.savetxt('ImageClass_loss_history.txt',

numpy.array(imageClass_history.history['loss']), delimiter = ',')

numpy.savetxt('ImageClass_acc_history.txt',

numpy.array(imageClass_history.history['categorical_accuracy']), delimiter = ',')

Section 5: Image classification model Inference in VM before deploying to TX2

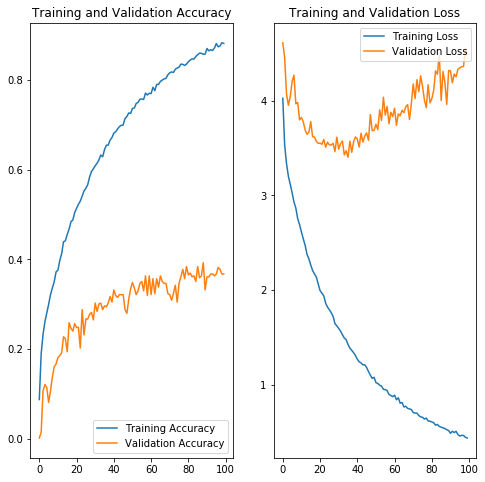

The code below depicts the training run for the code provided above. The code base for both image classification (plant type) as well as binary classification (poison vs edible) is the same and won't be duplicated. The only differences between the two are the accuracy (categorical vs binary) - and the chosen optimum model. The following discussion details the training of an image classification model - followed by the binary model.

# Visualize model performance

import matplotlib.pyplot as plt

acc = imageClass_history.history['categorical_accuracy']

val_acc = imageClass_history.history['val_categorical_accuracy']

loss = imageClass_history.history['loss']

val_loss = imageClass_history.history['val_loss']

epochs_range = range(NUM_EPOCHS)

plt.figure(figsize=(8, 8))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, acc, label='Training Accuracy')

plt.plot(epochs_range, val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)

plt.plot(epochs_range, loss, label='Training Loss')

plt.plot(epochs_range, val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')

plt.show()

|

The accuracy and loss plots above suggest that we are potentially over-fitting our model towards the training dataset. We can see that high accuracy is achieved in the image classification - which is typically hard given the number of classes we are trying to predict. After 100 epochs we are achieving >90% accuracy on the training set, but <40% accuracy on the validation set. Additionally, validation accuracy appears to have plateaued at this level.

Similarly if we analyze the loss graph, we can see that loss is minimized for the validation dataset near 40 epochs and begins to rise - while the training loss continues to decrease. This structure was similar amongst the models that were analyzed using different layer and node architectures as well as activation functions.

In the future we would like to: - Change the dropout rate - perhaps 0.3 is too low and allows the deep model to over-fit - Use larger datasets. Perhaps there is a feature contained within the image augmented photos that the model is fitting to - whereby the validation dataset doesn't have augmented photos. The purpose of image augmentation is to increase the training dataset size as well as improve model performance to a variety of photos. This will need further investigation to prove whether this assumption is true.

It should be noted that our best performance was found when we enabled training on the base model layers (ResNet50). Presumably the residual layer architecture helped improve training inference above and beyond what the additional layers on top of the base model could provide. While this did impact training time on the VM (~36sec per epoch vs ~24 sec/epoch) the increase is marginal. Since training is done offline (only model inference at the edge needs to be performant) the tradeoff in training time vs accuracy is worthwhile.

While this model can further be optimized in order to increase validation accuracy - we understand that image classification - especially given the dataset similarity (all pictures of plants), can be difficult. We have chosen to move forward with this model which is lean (only uses 2 dense layers on top of the ResNet50 base model). This should help with model performance on the edge device. Future work will continue to improve model performance while also retaining a small network size.

Save Model for deployment

imageClass_finetune_model

<tensorflow.python.keras.engine.training.Model at 0x7facc022eef0>

os.makedirs('./model', exist_ok=True)

imageClass_finetune_model.save('./model/imageclass_model2.h5')

from keras import backend as K

import tensorflow as tf

from tensorflow.python.keras.models import load_model

# This line must be executed before loading Keras model.

K.set_learning_phase(0)

Using TensorFlow backend.

imageClass_model = load_model('./model/imageclass_model2.h5')

imageClass_model

<tensorflow.python.keras.engine.training.Model at 0x7fd41c59eda0>

imageClass_model.summary()

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_1 (InputLayer) (None, 300, 300, 3) 0

__________________________________________________________________________________________________

conv1_pad (ZeroPadding2D) (None, 306, 306, 3) 0 input_1[0][0]

__________________________________________________________________________________________________

conv1 (Conv2D) (None, 150, 150, 64) 9472 conv1_pad[0][0]

__________________________________________________________________________________________________

bn_conv1 (BatchNormalizationV1) (None, 150, 150, 64) 256 conv1[0][0]

__________________________________________________________________________________________________

activation (Activation) (None, 150, 150, 64) 0 bn_conv1[0][0]

__________________________________________________________________________________________________

pool1_pad (ZeroPadding2D) (None, 152, 152, 64) 0 activation[0][0]

__________________________________________________________________________________________________

max_pooling2d (MaxPooling2D) (None, 75, 75, 64) 0 pool1_pad[0][0]

__________________________________________________________________________________________________

res2a_branch2a (Conv2D) (None, 75, 75, 64) 4160 max_pooling2d[0][0]

__________________________________________________________________________________________________

bn2a_branch2a (BatchNormalizati (None, 75, 75, 64) 256 res2a_branch2a[0][0]

__________________________________________________________________________________________________

activation_1 (Activation) (None, 75, 75, 64) 0 bn2a_branch2a[0][0]

__________________________________________________________________________________________________

res2a_branch2b (Conv2D) (None, 75, 75, 64) 36928 activation_1[0][0]

__________________________________________________________________________________________________

bn2a_branch2b (BatchNormalizati (None, 75, 75, 64) 256 res2a_branch2b[0][0]

__________________________________________________________________________________________________

activation_2 (Activation) (None, 75, 75, 64) 0 bn2a_branch2b[0][0]

__________________________________________________________________________________________________

res2a_branch2c (Conv2D) (None, 75, 75, 256) 16640 activation_2[0][0]

__________________________________________________________________________________________________

res2a_branch1 (Conv2D) (None, 75, 75, 256) 16640 max_pooling2d[0][0]

__________________________________________________________________________________________________

bn2a_branch2c (BatchNormalizati (None, 75, 75, 256) 1024 res2a_branch2c[0][0]

__________________________________________________________________________________________________

bn2a_branch1 (BatchNormalizatio (None, 75, 75, 256) 1024 res2a_branch1[0][0]

__________________________________________________________________________________________________

add (Add) (None, 75, 75, 256) 0 bn2a_branch2c[0][0]

bn2a_branch1[0][0]

__________________________________________________________________________________________________

activation_3 (Activation) (None, 75, 75, 256) 0 add[0][0]

__________________________________________________________________________________________________

res2b_branch2a (Conv2D) (None, 75, 75, 64) 16448 activation_3[0][0]

__________________________________________________________________________________________________

bn2b_branch2a (BatchNormalizati (None, 75, 75, 64) 256 res2b_branch2a[0][0]

__________________________________________________________________________________________________

activation_4 (Activation) (None, 75, 75, 64) 0 bn2b_branch2a[0][0]

__________________________________________________________________________________________________

res2b_branch2b (Conv2D) (None, 75, 75, 64) 36928 activation_4[0][0]

__________________________________________________________________________________________________

bn2b_branch2b (BatchNormalizati (None, 75, 75, 64) 256 res2b_branch2b[0][0]

__________________________________________________________________________________________________

activation_5 (Activation) (None, 75, 75, 64) 0 bn2b_branch2b[0][0]

__________________________________________________________________________________________________

res2b_branch2c (Conv2D) (None, 75, 75, 256) 16640 activation_5[0][0]

__________________________________________________________________________________________________

bn2b_branch2c (BatchNormalizati (None, 75, 75, 256) 1024 res2b_branch2c[0][0]

__________________________________________________________________________________________________

add_1 (Add) (None, 75, 75, 256) 0 bn2b_branch2c[0][0]

activation_3[0][0]

__________________________________________________________________________________________________

activation_6 (Activation) (None, 75, 75, 256) 0 add_1[0][0]

__________________________________________________________________________________________________

res2c_branch2a (Conv2D) (None, 75, 75, 64) 16448 activation_6[0][0]

__________________________________________________________________________________________________

bn2c_branch2a (BatchNormalizati (None, 75, 75, 64) 256 res2c_branch2a[0][0]

__________________________________________________________________________________________________

activation_7 (Activation) (None, 75, 75, 64) 0 bn2c_branch2a[0][0]

__________________________________________________________________________________________________

res2c_branch2b (Conv2D) (None, 75, 75, 64) 36928 activation_7[0][0]

__________________________________________________________________________________________________

bn2c_branch2b (BatchNormalizati (None, 75, 75, 64) 256 res2c_branch2b[0][0]

__________________________________________________________________________________________________

activation_8 (Activation) (None, 75, 75, 64) 0 bn2c_branch2b[0][0]

__________________________________________________________________________________________________

res2c_branch2c (Conv2D) (None, 75, 75, 256) 16640 activation_8[0][0]

__________________________________________________________________________________________________

bn2c_branch2c (BatchNormalizati (None, 75, 75, 256) 1024 res2c_branch2c[0][0]

__________________________________________________________________________________________________

add_2 (Add) (None, 75, 75, 256) 0 bn2c_branch2c[0][0]

activation_6[0][0]

__________________________________________________________________________________________________

activation_9 (Activation) (None, 75, 75, 256) 0 add_2[0][0]

__________________________________________________________________________________________________

res3a_branch2a (Conv2D) (None, 38, 38, 128) 32896 activation_9[0][0]

__________________________________________________________________________________________________

bn3a_branch2a (BatchNormalizati (None, 38, 38, 128) 512 res3a_branch2a[0][0]

__________________________________________________________________________________________________

activation_10 (Activation) (None, 38, 38, 128) 0 bn3a_branch2a[0][0]

__________________________________________________________________________________________________

res3a_branch2b (Conv2D) (None, 38, 38, 128) 147584 activation_10[0][0]

__________________________________________________________________________________________________

bn3a_branch2b (BatchNormalizati (None, 38, 38, 128) 512 res3a_branch2b[0][0]

__________________________________________________________________________________________________

activation_11 (Activation) (None, 38, 38, 128) 0 bn3a_branch2b[0][0]

__________________________________________________________________________________________________

res3a_branch2c (Conv2D) (None, 38, 38, 512) 66048 activation_11[0][0]

__________________________________________________________________________________________________

res3a_branch1 (Conv2D) (None, 38, 38, 512) 131584 activation_9[0][0]

__________________________________________________________________________________________________

bn3a_branch2c (BatchNormalizati (None, 38, 38, 512) 2048 res3a_branch2c[0][0]

__________________________________________________________________________________________________

bn3a_branch1 (BatchNormalizatio (None, 38, 38, 512) 2048 res3a_branch1[0][0]

__________________________________________________________________________________________________

add_3 (Add) (None, 38, 38, 512) 0 bn3a_branch2c[0][0]

bn3a_branch1[0][0]

__________________________________________________________________________________________________

activation_12 (Activation) (None, 38, 38, 512) 0 add_3[0][0]

__________________________________________________________________________________________________

res3b_branch2a (Conv2D) (None, 38, 38, 128) 65664 activation_12[0][0]

__________________________________________________________________________________________________

bn3b_branch2a (BatchNormalizati (None, 38, 38, 128) 512 res3b_branch2a[0][0]

__________________________________________________________________________________________________

activation_13 (Activation) (None, 38, 38, 128) 0 bn3b_branch2a[0][0]

__________________________________________________________________________________________________

res3b_branch2b (Conv2D) (None, 38, 38, 128) 147584 activation_13[0][0]

__________________________________________________________________________________________________

bn3b_branch2b (BatchNormalizati (None, 38, 38, 128) 512 res3b_branch2b[0][0]

__________________________________________________________________________________________________

activation_14 (Activation) (None, 38, 38, 128) 0 bn3b_branch2b[0][0]

__________________________________________________________________________________________________

res3b_branch2c (Conv2D) (None, 38, 38, 512) 66048 activation_14[0][0]

__________________________________________________________________________________________________

bn3b_branch2c (BatchNormalizati (None, 38, 38, 512) 2048 res3b_branch2c[0][0]

__________________________________________________________________________________________________

add_4 (Add) (None, 38, 38, 512) 0 bn3b_branch2c[0][0]

activation_12[0][0]

__________________________________________________________________________________________________

activation_15 (Activation) (None, 38, 38, 512) 0 add_4[0][0]

__________________________________________________________________________________________________

res3c_branch2a (Conv2D) (None, 38, 38, 128) 65664 activation_15[0][0]

__________________________________________________________________________________________________

bn3c_branch2a (BatchNormalizati (None, 38, 38, 128) 512 res3c_branch2a[0][0]

__________________________________________________________________________________________________

activation_16 (Activation) (None, 38, 38, 128) 0 bn3c_branch2a[0][0]

__________________________________________________________________________________________________

res3c_branch2b (Conv2D) (None, 38, 38, 128) 147584 activation_16[0][0]

__________________________________________________________________________________________________

bn3c_branch2b (BatchNormalizati (None, 38, 38, 128) 512 res3c_branch2b[0][0]

__________________________________________________________________________________________________

activation_17 (Activation) (None, 38, 38, 128) 0 bn3c_branch2b[0][0]

__________________________________________________________________________________________________

res3c_branch2c (Conv2D) (None, 38, 38, 512) 66048 activation_17[0][0]

__________________________________________________________________________________________________

bn3c_branch2c (BatchNormalizati (None, 38, 38, 512) 2048 res3c_branch2c[0][0]

__________________________________________________________________________________________________

add_5 (Add) (None, 38, 38, 512) 0 bn3c_branch2c[0][0]

activation_15[0][0]

__________________________________________________________________________________________________

activation_18 (Activation) (None, 38, 38, 512) 0 add_5[0][0]

__________________________________________________________________________________________________

res3d_branch2a (Conv2D) (None, 38, 38, 128) 65664 activation_18[0][0]

__________________________________________________________________________________________________

bn3d_branch2a (BatchNormalizati (None, 38, 38, 128) 512 res3d_branch2a[0][0]

__________________________________________________________________________________________________

activation_19 (Activation) (None, 38, 38, 128) 0 bn3d_branch2a[0][0]

__________________________________________________________________________________________________

res3d_branch2b (Conv2D) (None, 38, 38, 128) 147584 activation_19[0][0]

__________________________________________________________________________________________________

bn3d_branch2b (BatchNormalizati (None, 38, 38, 128) 512 res3d_branch2b[0][0]

__________________________________________________________________________________________________

activation_20 (Activation) (None, 38, 38, 128) 0 bn3d_branch2b[0][0]

__________________________________________________________________________________________________

res3d_branch2c (Conv2D) (None, 38, 38, 512) 66048 activation_20[0][0]

__________________________________________________________________________________________________

bn3d_branch2c (BatchNormalizati (None, 38, 38, 512) 2048 res3d_branch2c[0][0]

__________________________________________________________________________________________________

add_6 (Add) (None, 38, 38, 512) 0 bn3d_branch2c[0][0]

activation_18[0][0]

__________________________________________________________________________________________________

activation_21 (Activation) (None, 38, 38, 512) 0 add_6[0][0]

__________________________________________________________________________________________________

res4a_branch2a (Conv2D) (None, 19, 19, 256) 131328 activation_21[0][0]

__________________________________________________________________________________________________

bn4a_branch2a (BatchNormalizati (None, 19, 19, 256) 1024 res4a_branch2a[0][0]

__________________________________________________________________________________________________

activation_22 (Activation) (None, 19, 19, 256) 0 bn4a_branch2a[0][0]

__________________________________________________________________________________________________

res4a_branch2b (Conv2D) (None, 19, 19, 256) 590080 activation_22[0][0]

__________________________________________________________________________________________________

bn4a_branch2b (BatchNormalizati (None, 19, 19, 256) 1024 res4a_branch2b[0][0]

__________________________________________________________________________________________________

activation_23 (Activation) (None, 19, 19, 256) 0 bn4a_branch2b[0][0]

__________________________________________________________________________________________________

res4a_branch2c (Conv2D) (None, 19, 19, 1024) 263168 activation_23[0][0]

__________________________________________________________________________________________________

res4a_branch1 (Conv2D) (None, 19, 19, 1024) 525312 activation_21[0][0]

__________________________________________________________________________________________________

bn4a_branch2c (BatchNormalizati (None, 19, 19, 1024) 4096 res4a_branch2c[0][0]

__________________________________________________________________________________________________

bn4a_branch1 (BatchNormalizatio (None, 19, 19, 1024) 4096 res4a_branch1[0][0]

__________________________________________________________________________________________________

add_7 (Add) (None, 19, 19, 1024) 0 bn4a_branch2c[0][0]

bn4a_branch1[0][0]

__________________________________________________________________________________________________

activation_24 (Activation) (None, 19, 19, 1024) 0 add_7[0][0]

__________________________________________________________________________________________________

res4b_branch2a (Conv2D) (None, 19, 19, 256) 262400 activation_24[0][0]

__________________________________________________________________________________________________

bn4b_branch2a (BatchNormalizati (None, 19, 19, 256) 1024 res4b_branch2a[0][0]

__________________________________________________________________________________________________

activation_25 (Activation) (None, 19, 19, 256) 0 bn4b_branch2a[0][0]

__________________________________________________________________________________________________

res4b_branch2b (Conv2D) (None, 19, 19, 256) 590080 activation_25[0][0]

__________________________________________________________________________________________________

bn4b_branch2b (BatchNormalizati (None, 19, 19, 256) 1024 res4b_branch2b[0][0]

__________________________________________________________________________________________________

activation_26 (Activation) (None, 19, 19, 256) 0 bn4b_branch2b[0][0]

__________________________________________________________________________________________________

res4b_branch2c (Conv2D) (None, 19, 19, 1024) 263168 activation_26[0][0]

__________________________________________________________________________________________________

bn4b_branch2c (BatchNormalizati (None, 19, 19, 1024) 4096 res4b_branch2c[0][0]

__________________________________________________________________________________________________

add_8 (Add) (None, 19, 19, 1024) 0 bn4b_branch2c[0][0]

activation_24[0][0]

__________________________________________________________________________________________________

activation_27 (Activation) (None, 19, 19, 1024) 0 add_8[0][0]

__________________________________________________________________________________________________

res4c_branch2a (Conv2D) (None, 19, 19, 256) 262400 activation_27[0][0]

__________________________________________________________________________________________________

bn4c_branch2a (BatchNormalizati (None, 19, 19, 256) 1024 res4c_branch2a[0][0]

__________________________________________________________________________________________________

activation_28 (Activation) (None, 19, 19, 256) 0 bn4c_branch2a[0][0]

__________________________________________________________________________________________________

res4c_branch2b (Conv2D) (None, 19, 19, 256) 590080 activation_28[0][0]

__________________________________________________________________________________________________

bn4c_branch2b (BatchNormalizati (None, 19, 19, 256) 1024 res4c_branch2b[0][0]

__________________________________________________________________________________________________

activation_29 (Activation) (None, 19, 19, 256) 0 bn4c_branch2b[0][0]

__________________________________________________________________________________________________

res4c_branch2c (Conv2D) (None, 19, 19, 1024) 263168 activation_29[0][0]

__________________________________________________________________________________________________

bn4c_branch2c (BatchNormalizati (None, 19, 19, 1024) 4096 res4c_branch2c[0][0]

__________________________________________________________________________________________________

add_9 (Add) (None, 19, 19, 1024) 0 bn4c_branch2c[0][0]

activation_27[0][0]

__________________________________________________________________________________________________

activation_30 (Activation) (None, 19, 19, 1024) 0 add_9[0][0]

__________________________________________________________________________________________________

res4d_branch2a (Conv2D) (None, 19, 19, 256) 262400 activation_30[0][0]

__________________________________________________________________________________________________

bn4d_branch2a (BatchNormalizati (None, 19, 19, 256) 1024 res4d_branch2a[0][0]

__________________________________________________________________________________________________

activation_31 (Activation) (None, 19, 19, 256) 0 bn4d_branch2a[0][0]

__________________________________________________________________________________________________

res4d_branch2b (Conv2D) (None, 19, 19, 256) 590080 activation_31[0][0]

__________________________________________________________________________________________________

bn4d_branch2b (BatchNormalizati (None, 19, 19, 256) 1024 res4d_branch2b[0][0]

__________________________________________________________________________________________________

activation_32 (Activation) (None, 19, 19, 256) 0 bn4d_branch2b[0][0]

__________________________________________________________________________________________________

res4d_branch2c (Conv2D) (None, 19, 19, 1024) 263168 activation_32[0][0]

__________________________________________________________________________________________________

bn4d_branch2c (BatchNormalizati (None, 19, 19, 1024) 4096 res4d_branch2c[0][0]

__________________________________________________________________________________________________

add_10 (Add) (None, 19, 19, 1024) 0 bn4d_branch2c[0][0]

activation_30[0][0]

__________________________________________________________________________________________________

activation_33 (Activation) (None, 19, 19, 1024) 0 add_10[0][0]

__________________________________________________________________________________________________

res4e_branch2a (Conv2D) (None, 19, 19, 256) 262400 activation_33[0][0]

__________________________________________________________________________________________________

bn4e_branch2a (BatchNormalizati (None, 19, 19, 256) 1024 res4e_branch2a[0][0]

__________________________________________________________________________________________________

activation_34 (Activation) (None, 19, 19, 256) 0 bn4e_branch2a[0][0]

__________________________________________________________________________________________________

res4e_branch2b (Conv2D) (None, 19, 19, 256) 590080 activation_34[0][0]

__________________________________________________________________________________________________

bn4e_branch2b (BatchNormalizati (None, 19, 19, 256) 1024 res4e_branch2b[0][0]

__________________________________________________________________________________________________

activation_35 (Activation) (None, 19, 19, 256) 0 bn4e_branch2b[0][0]

__________________________________________________________________________________________________

res4e_branch2c (Conv2D) (None, 19, 19, 1024) 263168 activation_35[0][0]

__________________________________________________________________________________________________

bn4e_branch2c (BatchNormalizati (None, 19, 19, 1024) 4096 res4e_branch2c[0][0]

__________________________________________________________________________________________________

add_11 (Add) (None, 19, 19, 1024) 0 bn4e_branch2c[0][0]

activation_33[0][0]

__________________________________________________________________________________________________

activation_36 (Activation) (None, 19, 19, 1024) 0 add_11[0][0]

__________________________________________________________________________________________________

res4f_branch2a (Conv2D) (None, 19, 19, 256) 262400 activation_36[0][0]

__________________________________________________________________________________________________

bn4f_branch2a (BatchNormalizati (None, 19, 19, 256) 1024 res4f_branch2a[0][0]

__________________________________________________________________________________________________

activation_37 (Activation) (None, 19, 19, 256) 0 bn4f_branch2a[0][0]

__________________________________________________________________________________________________

res4f_branch2b (Conv2D) (None, 19, 19, 256) 590080 activation_37[0][0]

__________________________________________________________________________________________________

bn4f_branch2b (BatchNormalizati (None, 19, 19, 256) 1024 res4f_branch2b[0][0]

__________________________________________________________________________________________________

activation_38 (Activation) (None, 19, 19, 256) 0 bn4f_branch2b[0][0]

__________________________________________________________________________________________________

res4f_branch2c (Conv2D) (None, 19, 19, 1024) 263168 activation_38[0][0]