Music Churn Prediction

Overview of Notebooks

For this project, the team created 3 separate Jupyter Notebooks to document its work:

1) Data Preparation / Feature Extraction Notebook: This notebook gives an overview of the project, and then takes the raw data, performs some initial exploration, and generates features for the predictive models. It also performs a brief exploratory data analysis on the feature set. This notebook outputs a .pkl file of features for the second notebook to read, which saves considerable time when building the models.

2) Predictive Modeling Notebook: This notebook reads the .pkl file, builds machine learning models to predict user churn, calculates and calibrates churn probabilities, and generates a projected economic impact of users who leave.

3) Initial Data Sourcing and Validation Notebook (HTML file): This is a static notebook (uploaded as an HTML file and not intended for executing code) that documents two other aspects of the project that don't logically fit in either of the first two notebooks:

- First, it contains the initial data extraction code used in Google BigQuery to reduce the data set from ~30GB down to ~1.6GB, to enable it to run on local machines.

- Second, it contains some code that performs data integrity checks, validating that the items extracted in our smaller data set approximately match those in the full data set (e.g., the same level of churn, the same timeframe, etc.)
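A check of this kind can be sketched as follows. This is a minimal illustration on made-up data, not the code from the HTML notebook: the frames `full_labels` and `sample_labels`, the helper `churn_rate`, and the 1-point tolerance are all assumptions chosen for the example.

```python
import pandas as pd

# Hypothetical stand-ins for the full and the reduced label tables.
full_labels = pd.DataFrame({"is_churn": [0] * 94 + [1] * 6})
sample_labels = pd.DataFrame({"is_churn": [0] * 47 + [1] * 3})


def churn_rate(labels: pd.DataFrame) -> float:
    """Share of users labelled as churned (is_churn == 1)."""
    return float(labels["is_churn"].mean())


full_rate = churn_rate(full_labels)
sample_rate = churn_rate(sample_labels)

# Assumed tolerance: flag the sample if its churn rate drifts by more than 1 point.
rates_match = abs(full_rate - sample_rate) <= 0.01
print(f"full={full_rate:.3f} sample={sample_rate:.3f} match={rates_match}")
```

The same pattern could be applied to other summary statistics, such as the minimum and maximum dates in each table.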

Table of Contents (this notebook only)

- Project Overview

- Data Set Overview

- Initial Data Loading

- User Logs Data: Preparation and Feature Extraction

- Transaction Data: Preparation and Feature Extraction

- Joining Features and Data Manipulation

- Quick Exploratory Data Analysis

- Writing Output

1. Project Overview

This dataset is comprised of data collected by WSDM regarding a music streaming subscription available through KKBOX.

Project Goals:

The project aims to accomplish the following goals:

- Create a model to predict customer churn from usage and transaction data

- Create an economic model for retention

- Recommend a process for keeping the churn and economic retention models updated with latest information

2. Data Set Overview

The initial data set contains 24 variables (23 input variables and 1 variable to predict) spread across 4 tables. Additional details:

- Original format: csv

- Total Size: 31.14 GB, reduced to 1.6GB for analysis on local machines.

- User Count: 1.02 million labeled users contained in the Train table (88,544 users after reduction)

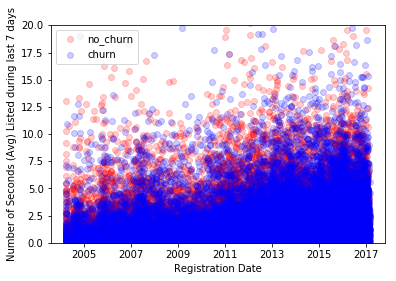

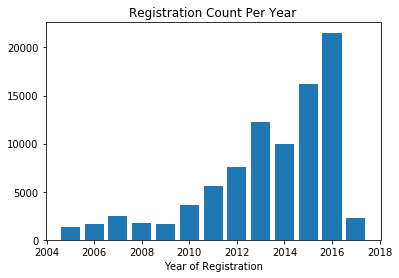

- Date Range: The customer usage and transaction data spans 26 months, from Jan. 2015 to Feb. 2017. However, one data field records the date each user first joined the service, and its values range from 2004 to 2017.

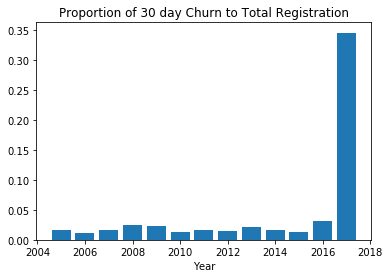

- Balance: Approximately 6% of users in the data churned (positive labels); the remaining 94% stayed (negative labels).
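The class balance can be measured directly from the label column. The sketch below uses a made-up `toy_labels` frame with the same 6% / 94% split; in the notebook the equivalent input would be the labels table. The negative-to-positive ratio is shown only as one common starting point for class weighting, not as something the project necessarily uses.

```python
import pandas as pd

# Toy label table with 3 churners out of 50 users (6%).
toy_labels = pd.DataFrame({"is_churn": [0] * 47 + [1] * 3})

# Fraction of users in each class, indexed by the label value (0 or 1).
balance = toy_labels["is_churn"].value_counts(normalize=True)

# Ratio of negatives to positives.
neg_to_pos = balance[0] / balance[1]
print(balance)
print(round(neg_to_pos, 2))
```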

Listed below are the tables and variables or features available for study:

Table: Transactions

This table contains transaction data for each user. Each row is a payment transaction.

- Data Shape: 21.5M rows X 9 columns

- Data Size: 1.6GB

Data Fields:

- Msno: User ID

- Payment_method_id: Payment Method

- Payment_plan_days: Length of plan

- Plan_list_price: Price for the plan

- Actual_amount_paid: Amount paid

- Is_auto_renew: T/F flag determining whether membership is auto-renew or not

- Transaction Date: Date of purchase

- Membership_expire_date: Expiry date

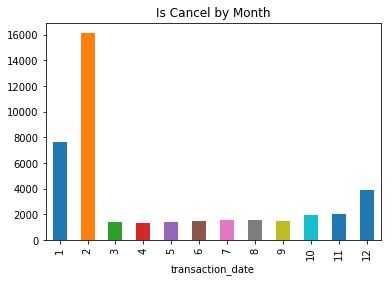

- Is_cancel: T/F flag determining whether or not the user canceled service. This field is correlated with the is_churn category, though it isn’t identical, as it also captures users who change service.

Table: User Logs

This table lists who, how, and when users used the service. Each row is a unique user-date combination.

- Data Shape: 392M rows X 9 columns

- Data Size: 29.1GB

Data Fields:

- Msno: User ID

- Date: Date of the logged activity

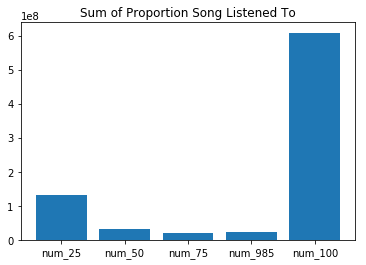

- Num_25: Number of songs played < 25% of song length

- Num_50: Number of songs played between 25% and 50%

- Num_75: Number of songs played between 50% and 75%

- Num_985: Number of songs played between 75% and 98.5%

- Num_100: Number of songs played between 98.5% and 100%

- Num_unq: Number of unique songs played

- Total_secs: Total seconds played

Table: Members

Demographic data on each user. Each row represents a unique user.

- Data Shape: 6.8M rows X 6 columns

- Data Size: 0.4GB

Data Fields:

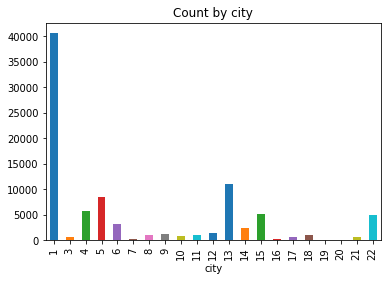

- Msno: User ID

- City: City of the user

- BD: Age of the user

- Gender: Male, Female or Blank

- Registered_via: Registration method

- Registration_init_time: Initial time of registration

- Expiration_date: Expiration of membership

Table: Train

Labels of which users churned. Each row represents a unique user.

- Data Shape: 1.0M rows X 2 columns

- Data Size: 45MB

Data Fields:

- Msno: User ID

- Is_churn: T/F flag variable we are trying to predict.

3. Initial Data Loading

This analysis is performed in the cells below.

#Import Required Libraries

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

%matplotlib inline

#Set initial parameter(s)

pd.set_option('display.max_rows', 200)

pd.options.display.max_columns = 2000

We load the data and index the Members and Labels tables by the primary key, msno (a string/object column that identifies the user):

#Load the data

members = pd.read_csv('members_filtered.csv')

transactions = pd.read_csv('transactions_filtered.csv')

user_logs = pd.read_csv('user_logs_filtered.csv')

labels = pd.read_csv('labels_filtered.csv')

#Set indices

members.set_index('msno', inplace = True)

labels.set_index('msno', inplace = True)

user_logs.head()

| | msno | date | num_25 | num_50 | num_75 | num_985 | num_100 | num_unq | total_secs |

|---|---|---|---|---|---|---|---|---|---|

| 0 | MVODUEUlSocm1sXa+zVGpJazPrRFiD4IzEQk0QCdg4U= | 20170217 | 37 | 2 | 2 | 3 | 30 | 66 | 9022.818 |

| 1 | o3Dg7baW8dXq7Jq7NzlVrWG4mZNVvqp62oWBDO/ybeE= | 20160209 | 36 | 5 | 2 | 3 | 48 | 71 | 13895.453 |

| 2 | 6ERcO7aqAKvrQ2CAvah79dVC7tJVZSjNti1MBfpNVW4= | 20151210 | 26 | 9 | 3 | 0 | 51 | 54 | 13919.805 |

| 3 | Xt9VAHNtHuST21tkcZSnGKjwv8vF8/COnsf6z28+fKk= | 20161025 | 22 | 8 | 4 | 2 | 49 | 75 | 15147.842 |

| 4 | zSgTJqoosTiFF7ZZi1DPTHgxLbnd99IgOEsTIDCcZHc= | 20160904 | 26 | 3 | 1 | 0 | 39 | 60 | 10558.829 |
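As a side note on the loading step, the index can also be set while reading the file. The sketch below is an illustration on an in-memory CSV with made-up rows, not the notebook's loading code; the `int8` dtype is an assumption that fits a 0/1 flag and reduces memory use.

```python
import io

import pandas as pd

# Made-up CSV content standing in for a labels file.
csv_text = "msno,is_churn\nuserA,0\nuserB,1\n"

# index_col sets the index at read time, replacing a separate set_index call.
toy = pd.read_csv(io.StringIO(csv_text), index_col="msno", dtype={"is_churn": "int8"})
print(toy.index.name, toy["is_churn"].dtype)
```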

Performing a quick inspection of the data:

print('Transactions: \n')

transactions.info()

print('User Logs: \n')

user_logs.info()

print('Members: \n')

members.info()

print('Labels:')

labels.info()

Transactions:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1353459 entries, 0 to 1353458

Data columns (total 9 columns):

msno 1353459 non-null object

payment_method_id 1353459 non-null int64

payment_plan_days 1353459 non-null int64

plan_list_price 1353459 non-null int64

actual_amount_paid 1353459 non-null int64

is_auto_renew 1353459 non-null int64

transaction_date 1353459 non-null int64

membership_expire_date 1353459 non-null int64

is_cancel 1353459 non-null int64

dtypes: int64(8), object(1)

memory usage: 92.9+ MB

User Logs:

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 19710631 entries, 0 to 19710630

Data columns (total 9 columns):

msno object

date int64

num_25 int64

num_50 int64

num_75 int64

num_985 int64

num_100 int64

num_unq int64

total_secs float64

dtypes: float64(1), int64(7), object(1)

memory usage: 1.3+ GB

Members:

<class 'pandas.core.frame.DataFrame'>

Index: 89473 entries, mKfgXQAmVeSKzN4rXW37qz0HbGCuYBspTBM3ONXZudg= to EFbHYa9/MiKYiyrl05cZ34Cky0FDeHxTYij0pXwkr2A=

Data columns (total 5 columns):

city 89473 non-null int64

bd 89473 non-null int64

gender 46137 non-null object

registered_via 89473 non-null int64

registration_init_time 89473 non-null int64

dtypes: int64(4), object(1)

memory usage: 4.1+ MB

Labels:

<class 'pandas.core.frame.DataFrame'>

Index: 99825 entries, 3lh94wH+UPK7ENgnA5svzFMYfJJRMZHU/WjgvhRJPzc= to DIgxCOJBeanFdqLOOPMTzwwkqgREVG+g1pwfY5LWvC4=

Data columns (total 1 columns):

is_churn 99825 non-null int64

dtypes: int64(1)

memory usage: 1.5+ MB

Helper routine to format the date for visualization:

def pd_to_date(df_col):

    """Function to convert a pandas dataframe column from %Y%m%d format to datetime format.

    Args:

        df_col (column in a pandas dataframe): The column to be changed.

    Returns:

        The same column in datetime format.

    """

    df_col = pd.to_datetime(df_col, format = '%Y%m%d')

    return df_col

#Convert date column to date format

user_logs['date'] = pd_to_date(user_logs['date'])
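To illustrate what the conversion does, here is a small sketch on made-up values. The cast to `str` and the `errors="coerce"` argument are additions for this example (they are not used in the notebook); they make the parse explicit and turn invalid dates into `NaT` rather than raising.

```python
import pandas as pd

# Made-up raw values; the last one is deliberately not a real calendar date.
raw = pd.Series([20170217, 20160209, 20161340])

# Parse YYYYMMDD values; unparseable entries become NaT instead of raising.
parsed = pd.to_datetime(raw.astype(str), format="%Y%m%d", errors="coerce")
print(parsed)
```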

The next two sections prepare the 2 major data tables/frames (User Logs & Transactions) independently and then bring them together for analysis.

4. User Logs Data: Preparation and Feature Extraction

We first create our groupby object to ultimately aggregate data by users:

#Create our groupby user object

user_logs_gb = user_logs.groupby(['msno'], sort=False)

The next cell creates three new columns:

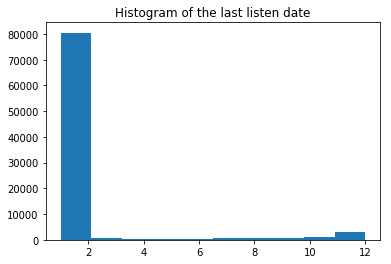

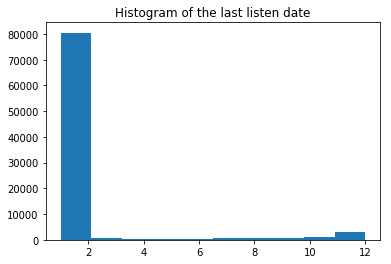

- max_date: The latest date on which each user has logged activity

- days_before_max_date: The number of days between the max date and the date of the current record.

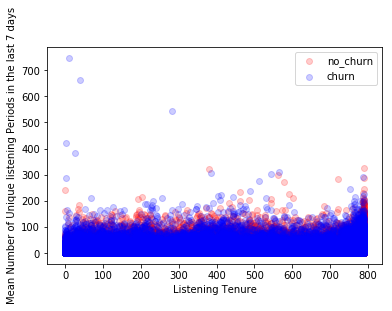

- listening_tenure: The number of days between the max date and min date of the current user. The hypothesis for this feature is that a user who's been using the service for a long time may be less likely to churn than one who's been using the service for a short time.

#Append max date to every row in main table

user_logs['max_date'] = user_logs_gb['date'].transform('max')

user_logs['days_before_max_date'] = (user_logs['max_date'] - user_logs['date']).dt.days

#The .dt.days accessor converts the timedelta to an integer number of days, for easier comparisons later.

#Generate user's first date, last date, and tenure

#Also, the user_logs_features table will be the primary table to return from the user logs table

user_logs_features = (user_logs_gb

.agg({'date':['max', 'min', lambda x: (max(x) - min(x)).days]}) #.days converts to int

.rename(columns={'max': 'max_date', 'min': 'min_date','<lambda>':'listening_tenure'})

)

#Add a 3rd level, used for joining data later

user_logs_features = pd.concat([user_logs_features], axis=1, keys=['date_features'])

Let's take a look at our initial users table:

user_logs_features.head()

| msno | (date_features, date, max_date) | (date_features, date, min_date) | (date_features, date, listening_tenure) |

|---|---|---|---|

| MVODUEUlSocm1sXa+zVGpJazPrRFiD4IzEQk0QCdg4U= | 2017-02-27 | 2015-07-11 | 597 |

| o3Dg7baW8dXq7Jq7NzlVrWG4mZNVvqp62oWBDO/ybeE= | 2017-02-07 | 2015-03-10 | 700 |

| 6ERcO7aqAKvrQ2CAvah79dVC7tJVZSjNti1MBfpNVW4= | 2017-02-17 | 2015-01-01 | 778 |

| Xt9VAHNtHuST21tkcZSnGKjwv8vF8/COnsf6z28+fKk= | 2017-02-28 | 2016-09-08 | 173 |

| zSgTJqoosTiFF7ZZi1DPTHgxLbnd99IgOEsTIDCcZHc= | 2017-02-13 | 2015-01-01 | 774 |
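The three date features can be reproduced on a toy frame to see what each one contains. The data below is made up, and the sketch uses the `.dt.days` accessor as one way to turn the timedeltas into integers.

```python
import pandas as pd

# Made-up activity dates for two users.
toy_logs = pd.DataFrame({
    "msno": ["a", "a", "a", "b", "b"],
    "date": pd.to_datetime(["2017-01-01", "2017-01-05", "2017-01-10",
                            "2016-12-01", "2016-12-31"]),
})

gb = toy_logs.groupby("msno", sort=False)

# transform('max') broadcasts each user's latest date back onto every row.
toy_logs["max_date"] = gb["date"].transform("max")
toy_logs["days_before_max_date"] = (toy_logs["max_date"] - toy_logs["date"]).dt.days

# Tenure: days between each user's first and last logged activity.
tenure = (gb["date"].max() - gb["date"].min()).dt.days.rename("listening_tenure")
print(toy_logs)
print(tenure)
```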

We now create features to look at patterns of usage over the past X days, counted back from each user's max_date (using days_before_max_date), to see what a user has been doing "lately". We apply this rationale to all of the usage columns in the user_logs table, giving us combinations of the following elements of our data:

- The usage columns: the number of songs played within each share of song length (num_25, num_50, num_75, num_985, and num_100), plus the number of unique songs and total seconds played.

- Activity over the last day, the last 7, 14, 31, 90, 180, and 365 days, and all days, noting that the date range is relative to each user's most recent activity.

For each of these combinations, we calculate (using groupby and aggregate) both the sum and mean of each feature. Finally, we also create a single count column (the number of rows, i.e. days with logged activity) for each window. In total, this generates 120 features (8 windows x 15 aggregates), which we then append to the user_logs_features table above.

#Create Features:

# For X in (seconds, 100, 985, 75, 50, 25, unique): total of X and average per active day of X

# Last day, last 7, 14, 31, 90, 180, 365 days, and total (note "last day" is relative to each user's most recent activity)

# 9999 is larger than any tenure in the data, so it acts as the "all days" window

for num_days in [1, 7, 14, 31, 90, 180, 365, 9999]:

    #Create groupby object for rows within num_days of each user's max date

    ul_gb_xdays = (user_logs.loc[(user_logs['days_before_max_date'] < num_days)]

                   .groupby(['msno'], sort=False))

    #Generate sum and mean (and count, once) for all the user logs stats

    past_xdays_by_user = (ul_gb_xdays

        .agg({'num_unq':['sum', 'mean', 'count'],

              'total_secs':['sum', 'mean'],

              'num_25':['sum', 'mean'],

              'num_50':['sum', 'mean'],

              'num_75':['sum', 'mean'],

              'num_985':['sum', 'mean'],

              'num_100':['sum', 'mean'],

             })

    )

    #Append level header

    past_xdays_by_user = pd.concat([past_xdays_by_user], axis=1, keys=['within_days_' + str(num_days)])

    #Join (append) to user_logs_features table

    user_logs_features = user_logs_features.join(past_xdays_by_user, how='inner')

Taking a quick look at our table now:

user_logs_features.head()

| date_features | within_days_1 | within_days_7 | within_days_14 | within_days_31 | within_days_90 | within_days_180 | within_days_365 | within_days_9999 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| date | num_unq | total_secs | num_25 | num_50 | num_75 | num_985 | num_100 | num_unq | total_secs | num_25 | num_50 | num_75 | num_985 | num_100 | num_unq | total_secs | num_25 | num_50 | num_75 | num_985 | num_100 | num_unq | total_secs | num_25 | num_50 | num_75 | num_985 | num_100 | num_unq | total_secs | num_25 | num_50 | num_75 | num_985 | num_100 | num_unq | total_secs | num_25 | num_50 | num_75 | num_985 | num_100 | num_unq | total_secs | num_25 | num_50 | num_75 | num_985 | num_100 | num_unq | total_secs | num_25 | num_50 | num_75 | num_985 | num_100 | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| max_date | min_date | listening_tenure | sum | mean | count | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | count | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | count | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | count | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | count | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | count | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | count | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | count | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | sum | mean | |

| msno | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| MVODUEUlSocm1sXa+zVGpJazPrRFiD4IzEQk0QCdg4U= | 2017-02-27 | 2015-07-11 | 597 | 17 | 17 | 1 | 29802.123 | 29802.123 | 17 | 17 | 1 | 1 | 0 | 0 | 1 | 1 | 115 | 115 | 161 | 26.833333 | 6 | 72502.206 | 12083.70100 | 99 | 16.500000 | 13 | 2.166667 | 11 | 1.833333 | 9 | 1.50 | 275 | 45.833333 | 478 | 39.833333 | 12 | 154157.897 | 12846.491417 | 207 | 17.250000 | 24 | 2.000000 | 16 | 1.333333 | 19 | 1.583333 | 595 | 49.583333 | 1040 | 41.600000 | 25 | 299571.033 | 11982.841320 | 448 | 17.920000 | 53 | 2.120000 | 27 | 1.080000 | 43 | 1.720000 | 1153 | 46.120000 | 2723 | 43.919355 | 62 | 610185.373 | 9841.699565 | 1227 | 19.790323 | 222 | 3.580645 | 103 | 1.661290 | 132 | 2.129032 | 2244 | 36.193548 | 5805 | 47.975207 | 121 | 1195064.265 | 9876.564174 | 2792 | 23.074380 | 543 | 4.487603 | 261 | 2.157025 | 252 | 2.082645 | 4267 | 35.264463 | 10396 | 45.004329 | 231 | 1989225.131 | 8611.364203 | 4768 | 20.640693 | 1243 | 5.380952 | 513 | 2.220779 | 479 | 2.073593 | 6908 | 29.904762 | 17549 | 46.181579 | 380 | 3134336.415 | 8248.253724 | 10198 | 26.836842 | 2395 | 6.302632 | 972 | 2.557895 | 806 | 2.121053 | 10495 | 27.618421 |

| o3Dg7baW8dXq7Jq7NzlVrWG4mZNVvqp62oWBDO/ybeE= | 2017-02-07 | 2015-03-10 | 700 | 1 | 1 | 1 | 274.176 | 274.176 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 161 | 26.833333 | 6 | 40311.015 | 6718.50250 | 52 | 8.666667 | 7 | 1.166667 | 7 | 1.166667 | 15 | 2.50 | 137 | 22.833333 | 253 | 25.300000 | 10 | 65631.235 | 6563.123500 | 84 | 8.400000 | 16 | 1.600000 | 16 | 1.600000 | 25 | 2.500000 | 218 | 21.800000 | 811 | 38.619048 | 21 | 201286.586 | 9585.075524 | 236 | 11.238095 | 43 | 2.047619 | 38 | 1.809524 | 53 | 2.523810 | 699 | 33.285714 | 1428 | 33.209302 | 43 | 408254.891 | 9494.299791 | 460 | 10.697674 | 78 | 1.813953 | 69 | 1.604651 | 101 | 2.348837 | 1430 | 33.255814 | 1648 | 32.313725 | 51 | 487842.759 | 9565.544294 | 495 | 9.705882 | 92 | 1.803922 | 76 | 1.490196 | 105 | 2.058824 | 1735 | 34.019608 | 3255 | 29.062500 | 112 | 968253.587 | 8645.121312 | 1114 | 9.946429 | 206 | 1.839286 | 164 | 1.464286 | 230 | 2.053571 | 3430 | 30.625000 | 7475 | 25.775862 | 290 | 2826851.820 | 9747.764897 | 3059 | 10.548276 | 692 | 2.386207 | 535 | 1.844828 | 567 | 1.955172 | 10075 | 34.741379 |

| 6ERcO7aqAKvrQ2CAvah79dVC7tJVZSjNti1MBfpNVW4= | 2017-02-17 | 2015-01-01 | 778 | 13 | 13 | 1 | 10363.972 | 10363.972 | 5 | 5 | 1 | 1 | 1 | 1 | 4 | 4 | 41 | 41 | 219 | 43.800000 | 5 | 68118.548 | 13623.70960 | 25 | 5.000000 | 6 | 1.200000 | 6 | 1.200000 | 7 | 1.40 | 289 | 57.800000 | 480 | 48.000000 | 10 | 121094.373 | 12109.437300 | 83 | 8.300000 | 24 | 2.400000 | 9 | 0.900000 | 11 | 1.100000 | 498 | 49.800000 | 870 | 43.500000 | 20 | 210674.360 | 10533.718000 | 190 | 9.500000 | 37 | 1.850000 | 20 | 1.000000 | 28 | 1.400000 | 827 | 41.350000 | 2390 | 44.259259 | 54 | 604775.769 | 11199.551278 | 490 | 9.074074 | 116 | 2.148148 | 79 | 1.462963 | 92 | 1.703704 | 2318 | 42.925926 | 4427 | 41.764151 | 106 | 1186775.568 | 11195.995925 | 750 | 7.075472 | 212 | 2.000000 | 143 | 1.349057 | 179 | 1.688679 | 4631 | 43.688679 | 9683 | 41.917749 | 231 | 2677487.134 | 11590.853394 | 1323 | 5.727273 | 425 | 1.839827 | 340 | 1.471861 | 396 | 1.714286 | 10499 | 45.450216 | 17589 | 35.605263 | 494 | 4836694.885 | 9790.880334 | 2799 | 5.665992 | 1023 | 2.070850 | 772 | 1.562753 | 770 | 1.558704 | 18443 | 37.334008 |

| Xt9VAHNtHuST21tkcZSnGKjwv8vF8/COnsf6z28+fKk= | 2017-02-28 | 2016-09-08 | 173 | 86 | 86 | 1 | 21094.770 | 21094.770 | 10 | 10 | 6 | 6 | 7 | 7 | 4 | 4 | 72 | 72 | 133 | 26.600000 | 5 | 32936.237 | 6587.24740 | 18 | 3.600000 | 8 | 1.600000 | 7 | 1.400000 | 8 | 1.60 | 112 | 22.400000 | 313 | 31.300000 | 10 | 72406.047 | 7240.604700 | 86 | 8.600000 | 18 | 1.800000 | 11 | 1.100000 | 13 | 1.300000 | 251 | 25.100000 | 493 | 21.434783 | 23 | 114170.059 | 4963.915609 | 126 | 5.478261 | 27 | 1.173913 | 16 | 0.695652 | 16 | 0.695652 | 395 | 17.173913 | 2060 | 34.915254 | 59 | 406402.278 | 6888.174203 | 781 | 13.237288 | 116 | 1.966102 | 75 | 1.271186 | 69 | 1.169492 | 1404 | 23.796610 | 3840 | 37.281553 | 103 | 799700.121 | 7764.078845 | 1387 | 13.466019 | 219 | 2.126214 | 159 | 1.543689 | 168 | 1.631068 | 2696 | 26.174757 | 3840 | 37.281553 | 103 | 799700.121 | 7764.078845 | 1387 | 13.466019 | 219 | 2.126214 | 159 | 1.543689 | 168 | 1.631068 | 2696 | 26.174757 | 3840 | 37.281553 | 103 | 799700.121 | 7764.078845 | 1387 | 13.466019 | 219 | 2.126214 | 159 | 1.543689 | 168 | 1.631068 | 2696 | 26.174757 |

| zSgTJqoosTiFF7ZZi1DPTHgxLbnd99IgOEsTIDCcZHc= | 2017-02-13 | 2015-01-01 | 774 | 22 | 22 | 1 | 2803.117 | 2803.117 | 9 | 9 | 0 | 0 | 3 | 3 | 0 | 0 | 10 | 10 | 50 | 12.500000 | 4 | 7935.679 | 1983.91975 | 17 | 4.250000 | 2 | 0.500000 | 3 | 0.750000 | 1 | 0.25 | 31 | 7.750000 | 101 | 14.428571 | 7 | 17605.267 | 2515.038143 | 41 | 5.857143 | 5 | 0.714286 | 4 | 0.571429 | 1 | 0.142857 | 71 | 10.142857 | 301 | 21.500000 | 14 | 40235.862 | 2873.990143 | 148 | 10.571429 | 15 | 1.071429 | 9 | 0.642857 | 7 | 0.500000 | 152 | 10.857143 | 759 | 23.000000 | 33 | 124239.481 | 3764.832758 | 284 | 8.606061 | 28 | 0.848485 | 21 | 0.636364 | 24 | 0.727273 | 466 | 14.121212 | 2722 | 32.023529 | 85 | 461324.690 | 5427.349294 | 1185 | 13.941176 | 96 | 1.129412 | 70 | 0.823529 | 123 | 1.447059 | 1712 | 20.141176 | 9576 | 46.712195 | 205 | 1931032.095 | 9419.668756 | 3431 | 16.736585 | 401 | 1.956098 | 278 | 1.356098 | 376 | 1.834146 | 7359 | 35.897561 | 16913 | 57.921233 | 292 | 3583828.437 | 12273.385058 | 5126 | 17.554795 | 591 | 2.023973 | 440 | 1.506849 | 559 | 1.914384 | 13815 | 47.311644 |

Good, we get the expected number of columns.
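The windowed aggregation and the column count can be checked on a small scale. The sketch below uses a made-up `toy_logs` frame with only two usage columns and two windows, and it combines the per-window frames with `pd.concat` rather than the `join` used in the loop above; the final lines redo the column arithmetic for the full set of eight windows and seven usage columns.

```python
import pandas as pd

# Toy user-log rows (made-up values); days_before_max_date is precomputed.
toy_logs = pd.DataFrame({
    "msno": ["a", "a", "b"],
    "days_before_max_date": [0, 10, 0],
    "num_unq": [5, 7, 2],
    "total_secs": [100.0, 300.0, 50.0],
})

frames = []
for num_days in [1, 31]:  # two of the eight windows, to keep the output small
    recent = toy_logs.loc[toy_logs["days_before_max_date"] < num_days]
    agg = recent.groupby("msno", sort=False).agg(
        {"num_unq": ["sum", "mean", "count"], "total_secs": ["sum", "mean"]}
    )
    # Add the window name as the top column level.
    frames.append(pd.concat([agg], axis=1, keys=[f"within_days_{num_days}"]))

toy_features = pd.concat(frames, axis=1, join="inner")

# Column-count arithmetic for the full notebook.
windows = [1, 7, 14, 31, 90, 180, 365, 9999]
usage_columns = ["num_unq", "total_secs", "num_25", "num_50",
                 "num_75", "num_985", "num_100"]
per_window = 2 * len(usage_columns) + 1           # sum and mean per column, plus one count
expected_columns = 3 + len(windows) * per_window  # the 3 date features come first
print(toy_features.shape, expected_columns)
```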

5. Transaction Data: Preparation and Feature Extraction

Having completed feature extraction for user logs, we now move on to creating features for the transaction data.

We begin by grouping the data by user.

# Grouping by the member (msno)

transactions_gb = transactions.sort_values(["transaction_date"]).groupby(['msno'])

# How many groups, i.e. members (msnos)? We're good if this matches the number of users in the labels table

print('%d Groups/msnos' %(len(transactions_gb.groups)))

print('%d Features' %(len(transactions.columns)))

99825 Groups/msnos

9 Features

We plan to create the following features from the transactions table:

- Simple features from the latest transaction

    - Plan number of days

    - Plan total amount paid

    - Plan list price

    - is_auto_renew

    - is_cancel

- Synthetic features from the latest transaction

    - Plan actual amount paid per day

- Aggregate values

    - Total number of plan days

    - Total of all the amounts paid for the plan

- Comparing transactions

    - Plan day difference between the latest and previous transaction

    - Amount paid per day difference between the latest and previous transaction

We begin by creating the total_plan_days and total_amount_paid:

# Features: Total_plan_days, Total_amount_paid

transactions_features = (transactions_gb

.agg({'payment_plan_days':'sum', 'actual_amount_paid':'sum' })

.rename(columns={'payment_plan_days': 'total_plan_days', 'actual_amount_paid': 'total_amount_paid',})

)

print('%d Entries in the DF: ' %(len(transactions_features)))

print('%d Features' %(len(transactions_features.columns)))

transactions_features.head()

99825 Entries in the DF:

2 Features

| msno | total_plan_days | total_amount_paid |

|---|---|---|

| +++l/EXNMLTijfLBa8p2TUVVVp2aFGSuUI/h7mLmthw= | 543 | 2831 |

| ++5nv+2nsvrWM7dOT+ZiWJ5uTZOzQS0NEvqu3jidTjU= | 90 | 297 |

| ++7IULiyKbNc8jllqhRuyKZjX1J4mPF4tsudFCJfv4k= | 513 | 2682 |

| ++Ck01c3EF07Ejek2jfXlKut+sEfg+0ry+A5uWeL9vY= | 270 | 891 |

| ++FPL1dXZBXC3Cf6gE0HQiIHg1Pd+DBdK7w52xcUmX0= | 457 | 2235 |
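The same totals can be expressed with named aggregation, which computes and renames in one step. This is a sketch on made-up rows and assumes pandas 0.25 or later (the version used in the notebook is not stated).

```python
import pandas as pd

# Made-up transactions: two for user "a", one for user "b".
toy_tx = pd.DataFrame({
    "msno": ["a", "a", "b"],
    "payment_plan_days": [30, 30, 90],
    "actual_amount_paid": [149, 149, 297],
})

# new_column=(source_column, aggregation) avoids a separate rename call.
toy_totals = toy_tx.groupby("msno").agg(
    total_plan_days=("payment_plan_days", "sum"),
    total_amount_paid=("actual_amount_paid", "sum"),
)
print(toy_totals)
```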

Next, we add amount_paid_per_day for a user's entire tenure:

# Plan actual amount paid/day for all the transactions by a user

# Adding the column amount_paid_per_day

transactions_features['amount_paid_per_day'] = (transactions_features['total_amount_paid']

/transactions_features['total_plan_days'])

print('%d Entries in the DF: ' %(len(transactions_features)))

print('%d Features' %(len(transactions_features.columns)))

transactions_features.head()

99825 Entries in the DF:

3 Features

| msno | total_plan_days | total_amount_paid | amount_paid_per_day |

|---|---|---|---|

| +++l/EXNMLTijfLBa8p2TUVVVp2aFGSuUI/h7mLmthw= | 543 | 2831 | 5.213628 |

| ++5nv+2nsvrWM7dOT+ZiWJ5uTZOzQS0NEvqu3jidTjU= | 90 | 297 | 3.300000 |

| ++7IULiyKbNc8jllqhRuyKZjX1J4mPF4tsudFCJfv4k= | 513 | 2682 | 5.228070 |

| ++Ck01c3EF07Ejek2jfXlKut+sEfg+0ry+A5uWeL9vY= | 270 | 891 | 3.300000 |

| ++FPL1dXZBXC3Cf6gE0HQiIHg1Pd+DBdK7w52xcUmX0= | 457 | 2235 | 4.890591 |
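One thing to watch with a per-day ratio is a zero denominator. Whether any users in this data have zero total plan days is not stated here, so the sketch below is only a precaution shown on made-up rows: pandas returns `inf` for a nonzero amount divided by zero and `NaN` for zero divided by zero, and both can be normalized to `NaN` before modeling.

```python
import numpy as np
import pandas as pd

# Made-up totals; users "b" and "c" have zero plan days.
toy = pd.DataFrame(
    {"total_amount_paid": [298, 0, 100], "total_plan_days": [60, 0, 0]},
    index=["a", "b", "c"],
)

ratio = toy["total_amount_paid"] / toy["total_plan_days"]

# Replace infinities with NaN so later steps can handle one missing-value marker.
safe_ratio = ratio.replace([np.inf, -np.inf], np.nan)
print(safe_ratio)
```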

Next, we add latest_payment_method_id, latest_plan_days, latest_plan_list_price, latest_amount_paid, latest_auto_renew, latest_transaction_date, latest_expire_date, and latest_is_cancel. We accomplish this by picking from the bottom of the ordered (by date) rows in groups.

# Features: latest transaction, renaming the columns

# V1- Fixed the name for plan_list_price column (now called latest_plan_list_price)

# tail(1) keeps the last row of each group, i.e. the most recent transaction (rows are sorted by date)

latest_transaction = transactions_gb.tail(1).rename(columns={'payment_method_id': 'latest_payment_method_id',

    'payment_plan_days': 'latest_plan_days',

    'plan_list_price': 'latest_plan_list_price',

    'actual_amount_paid': 'latest_amount_paid',

    'is_auto_renew': 'latest_auto_renew',

    'transaction_date': 'latest_transaction_date',

    'membership_expire_date': 'latest_expire_date',

    'is_cancel': 'latest_is_cancel' })

# Index by msno

latest_transaction.set_index('msno', inplace = True)

print('%d Entries in the DF: ' %(len(latest_transaction)))

print('%d Features' %(len(latest_transaction.columns)))

latest_transaction.head()

99825 Entries in the DF:

8 Features

| msno | latest_payment_method_id | latest_plan_days | latest_plan_list_price | latest_amount_paid | latest_auto_renew | latest_transaction_date | latest_expire_date | latest_is_cancel |

|---|---|---|---|---|---|---|---|---|

| z1Lm/BlRQraiaWJ7RaQWe0+l0Z40ACj7W+zk29FiaS4= | 38 | 30 | 149 | 149 | 0 | 20150102 | 20150503 | 0 |

| IwE/pih8PuqrY/rsnoZ/4TazDliyH9S8VWNc2/d7mJg= | 38 | 30 | 149 | 149 | 0 | 20150102 | 20150702 | 0 |

| ea9rY0uEPY0ImD2QVbYFb+z3zi5wniKWMUM1V8os7OY= | 32 | 410 | 1788 | 1788 | 0 | 20150104 | 20170213 | 0 |

| plhzwjmNJp0HW04NidfVa35JE216RaFYpSeUCwT11zQ= | 38 | 30 | 149 | 149 | 0 | 20150120 | 20170103 | 0 |

| PbSQ2KxR4gRnzjsRd8Up75qMYb70iuMwGk10/jPRljk= | 38 | 360 | 1200 | 1200 | 0 | 20150123 | 20170212 | 0 |
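The "sort by date, then take the last row per user" pattern can be seen on a toy frame with made-up transactions. Because `tail` returns rows in the sorted order of the underlying frame, the result is ordered by each user's latest transaction date rather than by user ID, which is why the table above starts with transactions from 2015.

```python
import pandas as pd

# Made-up transactions, deliberately out of date order.
toy_tx = pd.DataFrame({
    "msno": ["a", "b", "a", "b"],
    "transaction_date": [20170201, 20160101, 20160301, 20170115],
    "actual_amount_paid": [149, 99, 129, 99],
})

# Sort by date so the last row in each group is the most recent transaction.
ordered = toy_tx.sort_values("transaction_date")
latest = ordered.groupby("msno").tail(1).set_index("msno")
print(latest)
```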

Next, we add latest_amount_paid_per_day:

# Plan actual amount paid/day for the latest transaction

# Adding the column latest_amount_paid_per_day

latest_transaction['latest_amount_paid_per_day'] = (latest_transaction['latest_amount_paid']

/latest_transaction['latest_plan_days'])

print('%d Entries in the DF: ' %(len(latest_transaction)))

print('%d Features' %(len(latest_transaction.columns)))

latest_transaction.head()

99825 Entries in the DF:

9 Features

| msno | latest_payment_method_id | latest_plan_days | latest_plan_list_price | latest_amount_paid | latest_auto_renew | latest_transaction_date | latest_expire_date | latest_is_cancel | latest_amount_paid_per_day |

|---|---|---|---|---|---|---|---|---|---|

| z1Lm/BlRQraiaWJ7RaQWe0+l0Z40ACj7W+zk29FiaS4= | 38 | 30 | 149 | 149 | 0 | 20150102 | 20150503 | 0 | 4.966667 |

| IwE/pih8PuqrY/rsnoZ/4TazDliyH9S8VWNc2/d7mJg= | 38 | 30 | 149 | 149 | 0 | 20150102 | 20150702 | 0 | 4.966667 |

| ea9rY0uEPY0ImD2QVbYFb+z3zi5wniKWMUM1V8os7OY= | 32 | 410 | 1788 | 1788 | 0 | 20150104 | 20170213 | 0 | 4.360976 |

| plhzwjmNJp0HW04NidfVa35JE216RaFYpSeUCwT11zQ= | 38 | 30 | 149 | 149 | 0 | 20150120 | 20170103 | 0 | 4.966667 |

| PbSQ2KxR4gRnzjsRd8Up75qMYb70iuMwGk10/jPRljk= | 38 | 360 | 1200 | 1200 | 0 | 20150123 | 20170212 | 0 | 3.333333 |

Next, we compare two different items in our transaction data:

- Plan duration difference between the last 2 transactions

- Cost difference between the last 2 transactions

# Getting the 2 latest transactions and grouping by msno again

latest_transaction2_gb = transactions_gb.tail(2).groupby(['msno'])

# Getting the second-to-last transaction (for users with a single transaction, this is the latest one)

latest2_transaction = latest_transaction2_gb.head(1)

# Index by msno

latest2_transaction.set_index('msno', inplace = True)

# Amount paid per day for the 2nd latest transaction

latest2_transaction['latest2_amount_paid_per_day'] = (latest2_transaction['actual_amount_paid']

/latest2_transaction['payment_plan_days'])

# Difference in the renewal length among the latest 2 transactions

transactions_features['diff_renewal_duration'] = (latest_transaction['latest_plan_days']

- latest2_transaction['payment_plan_days'])

# Difference in plan cost (per day) between the latest 2 transactions

transactions_features['diff_plan_amount_paid_per_day'] = (latest_transaction['latest_amount_paid_per_day']

- latest2_transaction['latest2_amount_paid_per_day'])

print('%d Entries in the DF: ' %(len(transactions_features)))

print('%d Features' %(len(transactions_features.columns)))

transactions_features.head()

C:\Users\AOlson\AppData\Local\Continuum\anaconda3\lib\site-packages\ipykernel_launcher.py:12: SettingWithCopyWarning:

A value is trying to be set on a copy of a slice from a DataFrame.

Try using .loc[row_indexer,col_indexer] = value instead

See the caveats in the documentation: http://pandas.pydata.org/pandas-docs/stable/indexing.html#indexing-view-versus-copy

if sys.path[0] == '':

99825 Entries in the DF:

5 Features

| msno | total_plan_days | total_amount_paid | amount_paid_per_day | diff_renewal_duration | diff_plan_amount_paid_per_day |

|---|---|---|---|---|---|

| +++l/EXNMLTijfLBa8p2TUVVVp2aFGSuUI/h7mLmthw= | 543 | 2831 | 5.213628 | 0 | 0.000000 |

| ++5nv+2nsvrWM7dOT+ZiWJ5uTZOzQS0NEvqu3jidTjU= | 90 | 297 | 3.300000 | 0 | 0.000000 |

| ++7IULiyKbNc8jllqhRuyKZjX1J4mPF4tsudFCJfv4k= | 513 | 2682 | 5.228070 | 0 | 0.000000 |

| ++Ck01c3EF07Ejek2jfXlKut+sEfg+0ry+A5uWeL9vY= | 270 | 891 | 3.300000 | 0 | 0.000000 |

| ++FPL1dXZBXC3Cf6gE0HQiIHg1Pd+DBdK7w52xcUmX0= | 457 | 2235 | 4.890591 | 23 | 4.966667 |
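An alternative way to express the "latest minus previous" comparison is a grouped `diff`. This is a sketch on made-up rows, not the approach used in the cell above. One difference to note: for a user with a single transaction, the tail/head approach compares the transaction with itself and yields 0, whereas `diff` yields `NaN`, so the sketch fills with 0 to reproduce the same values.

```python
import pandas as pd

# Made-up transactions: three for user "a", one for user "b".
toy_tx = pd.DataFrame({
    "msno": ["a", "a", "a", "b"],
    "transaction_date": [20160101, 20160201, 20160301, 20170101],
    "payment_plan_days": [30, 30, 7, 30],
})

ordered = toy_tx.sort_values("transaction_date")

# Row-wise difference from the previous transaction of the same user.
ordered["diff_renewal_duration"] = ordered.groupby("msno")["payment_plan_days"].diff()

# Keep the value attached to each user's most recent transaction.
latest_diff = (ordered.groupby("msno").tail(1)
               .set_index("msno")["diff_renewal_duration"]
               .fillna(0))
print(latest_diff)
```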

Finally, we join all the features into a single data frame:

# Get all transaction features in a single DF

transactions_features = transactions_features.join(latest_transaction, how = 'inner')

# Test

print('%d Entries in the DF: ' %(len(transactions_features)))

print('%d Features' %(len(transactions_features.columns)))

transactions_features.head()

99825 Entries in the DF:

14 Features

| msno | total_plan_days | total_amount_paid | amount_paid_per_day | diff_renewal_duration | diff_plan_amount_paid_per_day | latest_payment_method_id | latest_plan_days | latest_plan_list_price | latest_amount_paid | latest_auto_renew | latest_transaction_date | latest_expire_date | latest_is_cancel | latest_amount_paid_per_day |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| +++l/EXNMLTijfLBa8p2TUVVVp2aFGSuUI/h7mLmthw= | 543 | 2831 | 5.213628 | 0 | 0.000000 | 39 | 30 | 149 | 149 | 1 | 20170131 | 20170319 | 0 | 4.966667 |

| ++5nv+2nsvrWM7dOT+ZiWJ5uTZOzQS0NEvqu3jidTjU= | 90 | 297 | 3.300000 | 0 | 0.000000 | 41 | 30 | 99 | 99 | 1 | 20170201 | 20170301 | 0 | 3.300000 |

| ++7IULiyKbNc8jllqhRuyKZjX1J4mPF4tsudFCJfv4k= | 513 | 2682 | 5.228070 | 0 | 0.000000 | 37 | 30 | 149 | 149 | 1 | 20170201 | 20170301 | 0 | 4.966667 |

| ++Ck01c3EF07Ejek2jfXlKut+sEfg+0ry+A5uWeL9vY= | 270 | 891 | 3.300000 | 0 | 0.000000 | 41 | 30 | 99 | 99 | 1 | 20170214 | 20170314 | 0 | 3.300000 |

| ++FPL1dXZBXC3Cf6gE0HQiIHg1Pd+DBdK7w52xcUmX0= | 457 | 2235 | 4.890591 | 23 | 4.966667 | 41 | 30 | 149 | 149 | 1 | 20160225 | 20160225 | 1 | 4.966667 |

6. Joining Features and Data Manipulation

Joining Features

Having completed features by user from the User Logs and Transactions tables, we will now join the features from these tables together with the Members and Labels (a.k.a., train) tables into a single data frame for predictive modeling.

First, we'll join the Members and Labels together:

#Join members and labels files

df_fa = None

df_fa = members.join(labels, how='inner')

df_fa.head()

| msno | city | bd | gender | registered_via | registration_init_time | is_churn |

|---|---|---|---|---|---|---|

| mKfgXQAmVeSKzN4rXW37qz0HbGCuYBspTBM3ONXZudg= | 1 | 0 | NaN | 13 | 20170120 | 0 |

| AFcKYsrudzim8OFa+fL/c9g5gZabAbhaJnoM0qmlJfo= | 1 | 0 | NaN | 13 | 20160907 | 0 |

| qk4mEZUYZq+4sQE7bzRYKc5Pvj+Xc7Wmu25DrCzltEU= | 1 | 0 | NaN | 13 | 20160902 | 0 |

| G2UGNLph2J6euGmZ7WIa1+Kc+dPZBJI0HbLPu5YtrZw= | 1 | 0 | NaN | 13 | 20161028 | 0 |

| EqSHZpMj5uddJvv2gXcHvuOKFOdS5NN6RalHfzEhhaI= | 1 | 0 | NaN | 13 | 20161004 | 0 |
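Because this is an inner join, only users present in both tables survive. The `info()` output earlier reports 89,473 members and 99,825 labels, so the joined frame can hold at most 89,473 users. The sketch below shows the effect on made-up frames and one way to list the users that drop out; the names `toy_members`, `toy_labels`, and `dropped` are illustrative.

```python
import pandas as pd

# Made-up tables: user "c" has a label but no member record.
toy_members = pd.DataFrame({"city": [1, 5]},
                           index=pd.Index(["a", "b"], name="msno"))
toy_labels = pd.DataFrame({"is_churn": [0, 1, 0]},
                          index=pd.Index(["a", "b", "c"], name="msno"))

# Inner join keeps only index values found in both frames.
joined = toy_members.join(toy_labels, how="inner")

# Labeled users that the join silently removes.
dropped = toy_labels.index.difference(toy_members.index)
print(len(joined), list(dropped))
```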

Next, we join the User Logs features table with the combined Members and the Labels table:

df_fa = df_fa.join(user_logs_features, how='inner')

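#Note: the UserWarning below is expected. Joining our single-level headers with the three-level User Logs headers flattens the latter into tuples, which we convert to plain strings later.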

df_fa.head()

C:\Users\AOlson\AppData\Local\Continuum\anaconda3\lib\site-packages\pandas\core\reshape\merge.py:558: UserWarning: merging between different levels can give an unintended result (1 levels on the left, 3 on the right)

warnings.warn(msg, UserWarning)

| city | bd | gender | registered_via | registration_init_time | is_churn | (date_features, date, max_date) | (date_features, date, min_date) | (date_features, date, listening_tenure) | (within_days_1, num_unq, sum) | (within_days_1, num_unq, mean) | (within_days_1, num_unq, count) | (within_days_1, total_secs, sum) | (within_days_1, total_secs, mean) | (within_days_1, num_25, sum) | (within_days_1, num_25, mean) | (within_days_1, num_50, sum) | (within_days_1, num_50, mean) | (within_days_1, num_75, sum) | (within_days_1, num_75, mean) | (within_days_1, num_985, sum) | (within_days_1, num_985, mean) | (within_days_1, num_100, sum) | (within_days_1, num_100, mean) | (within_days_7, num_unq, sum) | (within_days_7, num_unq, mean) | (within_days_7, num_unq, count) | (within_days_7, total_secs, sum) | (within_days_7, total_secs, mean) | (within_days_7, num_25, sum) | (within_days_7, num_25, mean) | (within_days_7, num_50, sum) | (within_days_7, num_50, mean) | (within_days_7, num_75, sum) | (within_days_7, num_75, mean) | (within_days_7, num_985, sum) | (within_days_7, num_985, mean) | (within_days_7, num_100, sum) | (within_days_7, num_100, mean) | (within_days_14, num_unq, sum) | (within_days_14, num_unq, mean) | (within_days_14, num_unq, count) | (within_days_14, total_secs, sum) | (within_days_14, total_secs, mean) | (within_days_14, num_25, sum) | (within_days_14, num_25, mean) | (within_days_14, num_50, sum) | (within_days_14, num_50, mean) | (within_days_14, num_75, sum) | (within_days_14, num_75, mean) | (within_days_14, num_985, sum) | (within_days_14, num_985, mean) | (within_days_14, num_100, sum) | (within_days_14, num_100, mean) | (within_days_31, num_unq, sum) | (within_days_31, num_unq, mean) | (within_days_31, num_unq, count) | (within_days_31, total_secs, sum) | (within_days_31, total_secs, mean) | (within_days_31, num_25, sum) | (within_days_31, num_25, mean) | (within_days_31, num_50, sum) | (within_days_31, num_50, mean) | (within_days_31, num_75, sum) | (within_days_31, num_75, mean) | (within_days_31, num_985, sum) | (within_days_31, num_985, mean) | (within_days_31, num_100, sum) | (within_days_31, num_100, mean) | (within_days_90, num_unq, sum) | (within_days_90, num_unq, mean) | (within_days_90, num_unq, count) | (within_days_90, total_secs, sum) | (within_days_90, total_secs, mean) | (within_days_90, num_25, sum) | (within_days_90, num_25, mean) | (within_days_90, num_50, sum) | (within_days_90, num_50, mean) | (within_days_90, num_75, sum) | (within_days_90, num_75, mean) | (within_days_90, num_985, sum) | (within_days_90, num_985, mean) | (within_days_90, num_100, sum) | (within_days_90, num_100, mean) | (within_days_180, num_unq, sum) | (within_days_180, num_unq, mean) | (within_days_180, num_unq, count) | (within_days_180, total_secs, sum) | (within_days_180, total_secs, mean) | (within_days_180, num_25, sum) | (within_days_180, num_25, mean) | (within_days_180, num_50, sum) | (within_days_180, num_50, mean) | (within_days_180, num_75, sum) | (within_days_180, num_75, mean) | (within_days_180, num_985, sum) | (within_days_180, num_985, mean) | (within_days_180, num_100, sum) | (within_days_180, num_100, mean) | (within_days_365, num_unq, sum) | (within_days_365, num_unq, mean) | (within_days_365, num_unq, count) | (within_days_365, total_secs, sum) | (within_days_365, total_secs, mean) | (within_days_365, num_25, sum) | (within_days_365, num_25, mean) | (within_days_365, num_50, sum) | (within_days_365, num_50, mean) | (within_days_365, num_75, sum) | (within_days_365, num_75, mean) | (within_days_365, num_985, sum) | (within_days_365, num_985, mean) | (within_days_365, num_100, sum) | (within_days_365, num_100, mean) | (within_days_9999, num_unq, sum) | (within_days_9999, num_unq, mean) | (within_days_9999, num_unq, count) | (within_days_9999, total_secs, sum) | (within_days_9999, total_secs, mean) | (within_days_9999, num_25, sum) | (within_days_9999, num_25, mean) | (within_days_9999, num_50, sum) | (within_days_9999, num_50, mean) | (within_days_9999, num_75, sum) | (within_days_9999, num_75, mean) | (within_days_9999, num_985, sum) | (within_days_9999, num_985, mean) | (within_days_9999, num_100, sum) | (within_days_9999, num_100, mean) | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| msno | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mKfgXQAmVeSKzN4rXW37qz0HbGCuYBspTBM3ONXZudg= | 1 | 0 | NaN | 13 | 20170120 | 0 | 2017-02-24 | 2017-01-20 | 35 | 1 | 1 | 1 | 10.068 | 10.068 | 2 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1.000000 | 1 | 10.068 | 10.068000 | 2 | 2.000000 | 0 | 0.000000 | 0 | 0.000000 | 0 | 0.000000 | 0 | 0.000000 | 1 | 1.000000 | 1 | 10.068 | 10.068000 | 2 | 2.000000 | 0 | 0.000000 | 0 | 0.000000 | 0 | 0.000000 | 0 | 0.000000 | 27 | 6.750000 | 4 | 3158.450 | 789.612500 | 33 | 8.250000 | 1 | 0.250000 | 1 | 0.250000 | 1 | 0.250000 | 9 | 2.250000 | 29 | 4.833333 | 6 | 3245.638 | 540.939667 | 41 | 6.833333 | 1 | 0.166667 | 1 | 0.166667 | 1 | 0.166667 | 9 | 1.500000 | 29 | 4.833333 | 6 | 3245.638 | 540.939667 | 41 | 6.833333 | 1 | 0.166667 | 1 | 0.166667 | 1 | 0.166667 | 9 | 1.500000 | 29 | 4.833333 | 6 | 3245.638 | 540.939667 | 41 | 6.833333 | 1 | 0.166667 | 1 | 0.166667 | 1 | 0.166667 | 9 | 1.500000 | 29 | 4.833333 | 6 | 3245.638 | 540.939667 | 41 | 6.833333 | 1 | 0.166667 | 1 | 0.166667 | 1 | 0.166667 | 9 | 1.500000 |

| AFcKYsrudzim8OFa+fL/c9g5gZabAbhaJnoM0qmlJfo= | 1 | 0 | NaN | 13 | 20160907 | 0 | 2017-02-27 | 2016-09-07 | 173 | 21 | 21 | 1 | 2633.631 | 2633.631 | 13 | 13 | 3 | 3 | 1 | 1 | 1 | 1 | 8 | 8 | 228 | 32.571429 | 7 | 32731.138 | 4675.876857 | 140 | 20.000000 | 29 | 4.142857 | 14 | 2.000000 | 20 | 2.857143 | 95 | 13.571429 | 512 | 36.571429 | 14 | 98422.408 | 7030.172000 | 301 | 21.500000 | 71 | 5.071429 | 34 | 2.428571 | 42 | 3.000000 | 305 | 21.785714 | 1044 | 36.000000 | 29 | 178909.861 | 6169.305552 | 656 | 22.620690 | 135 | 4.655172 | 61 | 2.103448 | 74 | 2.551724 | 571 | 19.689655 | 4298 | 58.876712 | 73 | 632743.845 | 8667.723904 | 2717 | 37.219178 | 393 | 5.383562 | 188 | 2.575342 | 189 | 2.589041 | 2094 | 28.684932 | 9218 | 59.857143 | 154 | 1232770.399 | 8005.002591 | 5289 | 34.344156 | 838 | 5.441558 | 410 | 2.662338 | 323 | 2.097403 | 4204 | 27.298701 | 9218 | 59.857143 | 154 | 1232770.399 | 8005.002591 | 5289 | 34.344156 | 838 | 5.441558 | 410 | 2.662338 | 323 | 2.097403 | 4204 | 27.298701 | 9218 | 59.857143 | 154 | 1232770.399 | 8005.002591 | 5289 | 34.344156 | 838 | 5.441558 | 410 | 2.662338 | 323 | 2.097403 | 4204 | 27.298701 |

| qk4mEZUYZq+4sQE7bzRYKc5Pvj+Xc7Wmu25DrCzltEU= | 1 | 0 | NaN | 13 | 20160902 | 0 | 2017-02-26 | 2016-09-02 | 177 | 1 | 1 | 1 | 271.093 | 271.093 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 243 | 34.714286 | 7 | 60581.740 | 8654.534286 | 3 | 0.428571 | 1 | 0.142857 | 2 | 0.285714 | 1 | 0.142857 | 238 | 34.000000 | 489 | 34.928571 | 14 | 122772.792 | 8769.485143 | 32 | 2.285714 | 2 | 0.142857 | 5 | 0.357143 | 11 | 0.785714 | 476 | 34.000000 | 899 | 32.107143 | 28 | 231622.820 | 8272.243571 | 73 | 2.607143 | 7 | 0.250000 | 11 | 0.392857 | 14 | 0.500000 | 893 | 31.892857 | 2396 | 35.235294 | 68 | 770040.608 | 11324.126588 | 180 | 2.647059 | 32 | 0.470588 | 32 | 0.470588 | 33 | 0.485294 | 2953 | 43.426471 | 3580 | 32.844037 | 109 | 1137009.556 | 10431.280330 | 423 | 3.880734 | 72 | 0.660550 | 58 | 0.532110 | 58 | 0.532110 | 4308 | 39.522936 | 3580 | 32.844037 | 109 | 1137009.556 | 10431.280330 | 423 | 3.880734 | 72 | 0.660550 | 58 | 0.532110 | 58 | 0.532110 | 4308 | 39.522936 | 3580 | 32.844037 | 109 | 1137009.556 | 10431.280330 | 423 | 3.880734 | 72 | 0.660550 | 58 | 0.532110 | 58 | 0.532110 | 4308 | 39.522936 |

| G2UGNLph2J6euGmZ7WIa1+Kc+dPZBJI0HbLPu5YtrZw= | 1 | 0 | NaN | 13 | 20161028 | 0 | 2017-02-28 | 2016-10-28 | 123 | 17 | 17 | 1 | 1626.704 | 1626.704 | 15 | 15 | 1 | 1 | 1 | 1 | 0 | 0 | 6 | 6 | 121 | 24.200000 | 5 | 30054.147 | 6010.829400 | 29 | 5.800000 | 10 | 2.000000 | 5 | 1.000000 | 2 | 0.400000 | 123 | 24.600000 | 192 | 17.454545 | 11 | 43518.795 | 3956.254091 | 44 | 4.000000 | 11 | 1.000000 | 7 | 0.636364 | 7 | 0.636364 | 174 | 15.818182 | 457 | 17.576923 | 26 | 111841.140 | 4301.582308 | 80 | 3.076923 | 23 | 0.884615 | 15 | 0.576923 | 15 | 0.576923 | 456 | 17.538462 | 1229 | 21.189655 | 58 | 287422.839 | 4955.566190 | 203 | 3.500000 | 81 | 1.396552 | 55 | 0.948276 | 60 | 1.034483 | 1145 | 19.741379 | 1441 | 20.884058 | 69 | 326268.069 | 4728.522739 | 247 | 3.579710 | 115 | 1.666667 | 74 | 1.072464 | 76 | 1.101449 | 1272 | 18.434783 | 1441 | 20.884058 | 69 | 326268.069 | 4728.522739 | 247 | 3.579710 | 115 | 1.666667 | 74 | 1.072464 | 76 | 1.101449 | 1272 | 18.434783 | 1441 | 20.884058 | 69 | 326268.069 | 4728.522739 | 247 | 3.579710 | 115 | 1.666667 | 74 | 1.072464 | 76 | 1.101449 | 1272 | 18.434783 |

| EqSHZpMj5uddJvv2gXcHvuOKFOdS5NN6RalHfzEhhaI= | 1 | 0 | NaN | 13 | 20161004 | 0 | 2016-10-26 | 2016-10-04 | 22 | 1 | 1 | 1 | 156.204 | 156.204 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 14 | 7.000000 | 2 | 2399.824 | 1199.912000 | 4 | 2.000000 | 5 | 2.500000 | 4 | 2.000000 | 0 | 0.000000 | 5 | 2.500000 | 17 | 4.250000 | 4 | 2630.818 | 657.704500 | 5 | 1.250000 | 7 | 1.750000 | 4 | 1.000000 | 0 | 0.000000 | 5 | 1.250000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 |
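The warning arises because the User Logs features carry three levels of column headers while `df_fa` carries one. A hedged alternative, which is not what this notebook does, is to flatten the multi-level headers explicitly before joining instead of relying on the side effect of the warning; newer pandas releases may refuse a mixed-level join outright. The toy frames and the underscore separator below are illustrative assumptions.

```python
import pandas as pd

# Toy single-level frame standing in for the Members + Labels table.
left = pd.DataFrame(
    {"city": [1, 5], "is_churn": [0, 1]},
    index=pd.Index(["user_a", "user_b"], name="msno"),
)

# Toy three-level columns mimicking (window, measure, statistic) headers.
cols = pd.MultiIndex.from_tuples(
    [("within_days_7", "num_unq", "sum"), ("within_days_7", "num_unq", "mean")]
)
right = pd.DataFrame(
    [[14, 7.0], [228, 32.6]],
    columns=cols,
    index=pd.Index(["user_a", "user_b"], name="msno"),
)

# Collapse each column tuple into one string so both frames have one header level.
right_flat = right.copy()
right_flat.columns = ["_".join(levels) for levels in right.columns]

joined = left.join(right_flat, how="inner")
print(list(joined.columns))
```

Flattening first means the join involves two single-level frames, so the result no longer depends on how a given pandas version handles mismatched header levels.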

Finally, we'll join in our Transaction features:

# Join the Transactions features

df_fa = df_fa.join(transactions_features, how='inner')

print('%d Entries in the DF: ' %(len(df_fa)))

print('%d Features' %(len(df_fa.columns)))

df_fa.head()

88544 Entries in the DF:

143 Features

| city | bd | gender | registered_via | registration_init_time | is_churn | (date_features, date, max_date) | (date_features, date, min_date) | (date_features, date, listening_tenure) | (within_days_1, num_unq, sum) | (within_days_1, num_unq, mean) | (within_days_1, num_unq, count) | (within_days_1, total_secs, sum) | (within_days_1, total_secs, mean) | (within_days_1, num_25, sum) | (within_days_1, num_25, mean) | (within_days_1, num_50, sum) | (within_days_1, num_50, mean) | (within_days_1, num_75, sum) | (within_days_1, num_75, mean) | (within_days_1, num_985, sum) | (within_days_1, num_985, mean) | (within_days_1, num_100, sum) | (within_days_1, num_100, mean) | (within_days_7, num_unq, sum) | (within_days_7, num_unq, mean) | (within_days_7, num_unq, count) | (within_days_7, total_secs, sum) | (within_days_7, total_secs, mean) | (within_days_7, num_25, sum) | (within_days_7, num_25, mean) | (within_days_7, num_50, sum) | (within_days_7, num_50, mean) | (within_days_7, num_75, sum) | (within_days_7, num_75, mean) | (within_days_7, num_985, sum) | (within_days_7, num_985, mean) | (within_days_7, num_100, sum) | (within_days_7, num_100, mean) | (within_days_14, num_unq, sum) | (within_days_14, num_unq, mean) | (within_days_14, num_unq, count) | (within_days_14, total_secs, sum) | (within_days_14, total_secs, mean) | (within_days_14, num_25, sum) | (within_days_14, num_25, mean) | (within_days_14, num_50, sum) | (within_days_14, num_50, mean) | (within_days_14, num_75, sum) | (within_days_14, num_75, mean) | (within_days_14, num_985, sum) | (within_days_14, num_985, mean) | (within_days_14, num_100, sum) | (within_days_14, num_100, mean) | (within_days_31, num_unq, sum) | (within_days_31, num_unq, mean) | (within_days_31, num_unq, count) | (within_days_31, total_secs, sum) | (within_days_31, total_secs, mean) | (within_days_31, num_25, sum) | (within_days_31, num_25, mean) | (within_days_31, num_50, sum) | (within_days_31, num_50, mean) | (within_days_31, num_75, sum) | (within_days_31, num_75, mean) | (within_days_31, num_985, sum) | (within_days_31, num_985, mean) | (within_days_31, num_100, sum) | (within_days_31, num_100, mean) | (within_days_90, num_unq, sum) | (within_days_90, num_unq, mean) | (within_days_90, num_unq, count) | (within_days_90, total_secs, sum) | (within_days_90, total_secs, mean) | (within_days_90, num_25, sum) | (within_days_90, num_25, mean) | (within_days_90, num_50, sum) | (within_days_90, num_50, mean) | (within_days_90, num_75, sum) | (within_days_90, num_75, mean) | (within_days_90, num_985, sum) | (within_days_90, num_985, mean) | (within_days_90, num_100, sum) | (within_days_90, num_100, mean) | (within_days_180, num_unq, sum) | (within_days_180, num_unq, mean) | (within_days_180, num_unq, count) | (within_days_180, total_secs, sum) | (within_days_180, total_secs, mean) | (within_days_180, num_25, sum) | (within_days_180, num_25, mean) | (within_days_180, num_50, sum) | (within_days_180, num_50, mean) | (within_days_180, num_75, sum) | (within_days_180, num_75, mean) | (within_days_180, num_985, sum) | (within_days_180, num_985, mean) | (within_days_180, num_100, sum) | (within_days_180, num_100, mean) | (within_days_365, num_unq, sum) | (within_days_365, num_unq, mean) | (within_days_365, num_unq, count) | (within_days_365, total_secs, sum) | (within_days_365, total_secs, mean) | (within_days_365, num_25, sum) | (within_days_365, num_25, mean) | (within_days_365, num_50, sum) | (within_days_365, num_50, mean) | (within_days_365, num_75, sum) | (within_days_365, num_75, mean) | (within_days_365, num_985, sum) | (within_days_365, num_985, mean) | (within_days_365, num_100, sum) | (within_days_365, num_100, mean) | (within_days_9999, num_unq, sum) | (within_days_9999, num_unq, mean) | (within_days_9999, num_unq, count) | (within_days_9999, total_secs, sum) | (within_days_9999, total_secs, mean) | (within_days_9999, num_25, sum) | (within_days_9999, num_25, mean) | (within_days_9999, num_50, sum) | (within_days_9999, num_50, mean) | (within_days_9999, num_75, sum) | (within_days_9999, num_75, mean) | (within_days_9999, num_985, sum) | (within_days_9999, num_985, mean) | (within_days_9999, num_100, sum) | (within_days_9999, num_100, mean) | total_plan_days | total_amount_paid | amount_paid_per_day | diff_renewal_duration | diff_plan_amount_paid_per_day | latest_payment_method_id | latest_plan_days | latest_plan_list_price | latest_amount_paid | latest_auto_renew | latest_transaction_date | latest_expire_date | latest_is_cancel | latest_amount_paid_per_day | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| msno | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mKfgXQAmVeSKzN4rXW37qz0HbGCuYBspTBM3ONXZudg= | 1 | 0 | NaN | 13 | 20170120 | 0 | 2017-02-24 | 2017-01-20 | 35 | 1 | 1 | 1 | 10.068 | 10.068 | 2 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1.000000 | 1 | 10.068 | 10.068000 | 2 | 2.000000 | 0 | 0.000000 | 0 | 0.000000 | 0 | 0.000000 | 0 | 0.000000 | 1 | 1.000000 | 1 | 10.068 | 10.068000 | 2 | 2.000000 | 0 | 0.000000 | 0 | 0.000000 | 0 | 0.000000 | 0 | 0.000000 | 27 | 6.750000 | 4 | 3158.450 | 789.612500 | 33 | 8.250000 | 1 | 0.250000 | 1 | 0.250000 | 1 | 0.250000 | 9 | 2.250000 | 29 | 4.833333 | 6 | 3245.638 | 540.939667 | 41 | 6.833333 | 1 | 0.166667 | 1 | 0.166667 | 1 | 0.166667 | 9 | 1.500000 | 29 | 4.833333 | 6 | 3245.638 | 540.939667 | 41 | 6.833333 | 1 | 0.166667 | 1 | 0.166667 | 1 | 0.166667 | 9 | 1.500000 | 29 | 4.833333 | 6 | 3245.638 | 540.939667 | 41 | 6.833333 | 1 | 0.166667 | 1 | 0.166667 | 1 | 0.166667 | 9 | 1.500000 | 29 | 4.833333 | 6 | 3245.638 | 540.939667 | 41 | 6.833333 | 1 | 0.166667 | 1 | 0.166667 | 1 | 0.166667 | 9 | 1.500000 | 60 | 258 | 4.300000 | 0 | 0.0 | 30 | 30 | 129 | 129 | 1 | 20170220 | 20170319 | 0 | 4.300000 |

| AFcKYsrudzim8OFa+fL/c9g5gZabAbhaJnoM0qmlJfo= | 1 | 0 | NaN | 13 | 20160907 | 0 | 2017-02-27 | 2016-09-07 | 173 | 21 | 21 | 1 | 2633.631 | 2633.631 | 13 | 13 | 3 | 3 | 1 | 1 | 1 | 1 | 8 | 8 | 228 | 32.571429 | 7 | 32731.138 | 4675.876857 | 140 | 20.000000 | 29 | 4.142857 | 14 | 2.000000 | 20 | 2.857143 | 95 | 13.571429 | 512 | 36.571429 | 14 | 98422.408 | 7030.172000 | 301 | 21.500000 | 71 | 5.071429 | 34 | 2.428571 | 42 | 3.000000 | 305 | 21.785714 | 1044 | 36.000000 | 29 | 178909.861 | 6169.305552 | 656 | 22.620690 | 135 | 4.655172 | 61 | 2.103448 | 74 | 2.551724 | 571 | 19.689655 | 4298 | 58.876712 | 73 | 632743.845 | 8667.723904 | 2717 | 37.219178 | 393 | 5.383562 | 188 | 2.575342 | 189 | 2.589041 | 2094 | 28.684932 | 9218 | 59.857143 | 154 | 1232770.399 | 8005.002591 | 5289 | 34.344156 | 838 | 5.441558 | 410 | 2.662338 | 323 | 2.097403 | 4204 | 27.298701 | 9218 | 59.857143 | 154 | 1232770.399 | 8005.002591 | 5289 | 34.344156 | 838 | 5.441558 | 410 | 2.662338 | 323 | 2.097403 | 4204 | 27.298701 | 9218 | 59.857143 | 154 | 1232770.399 | 8005.002591 | 5289 | 34.344156 | 838 | 5.441558 | 410 | 2.662338 | 323 | 2.097403 | 4204 | 27.298701 | 180 | 774 | 4.300000 | 0 | 0.0 | 30 | 30 | 129 | 129 | 1 | 20170207 | 20170306 | 0 | 4.300000 |

| qk4mEZUYZq+4sQE7bzRYKc5Pvj+Xc7Wmu25DrCzltEU= | 1 | 0 | NaN | 13 | 20160902 | 0 | 2017-02-26 | 2016-09-02 | 177 | 1 | 1 | 1 | 271.093 | 271.093 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 243 | 34.714286 | 7 | 60581.740 | 8654.534286 | 3 | 0.428571 | 1 | 0.142857 | 2 | 0.285714 | 1 | 0.142857 | 238 | 34.000000 | 489 | 34.928571 | 14 | 122772.792 | 8769.485143 | 32 | 2.285714 | 2 | 0.142857 | 5 | 0.357143 | 11 | 0.785714 | 476 | 34.000000 | 899 | 32.107143 | 28 | 231622.820 | 8272.243571 | 73 | 2.607143 | 7 | 0.250000 | 11 | 0.392857 | 14 | 0.500000 | 893 | 31.892857 | 2396 | 35.235294 | 68 | 770040.608 | 11324.126588 | 180 | 2.647059 | 32 | 0.470588 | 32 | 0.470588 | 33 | 0.485294 | 2953 | 43.426471 | 3580 | 32.844037 | 109 | 1137009.556 | 10431.280330 | 423 | 3.880734 | 72 | 0.660550 | 58 | 0.532110 | 58 | 0.532110 | 4308 | 39.522936 | 3580 | 32.844037 | 109 | 1137009.556 | 10431.280330 | 423 | 3.880734 | 72 | 0.660550 | 58 | 0.532110 | 58 | 0.532110 | 4308 | 39.522936 | 3580 | 32.844037 | 109 | 1137009.556 | 10431.280330 | 423 | 3.880734 | 72 | 0.660550 | 58 | 0.532110 | 58 | 0.532110 | 4308 | 39.522936 | 180 | 774 | 4.300000 | 0 | 0.0 | 30 | 30 | 129 | 129 | 1 | 20170202 | 20170301 | 0 | 4.300000 |

| G2UGNLph2J6euGmZ7WIa1+Kc+dPZBJI0HbLPu5YtrZw= | 1 | 0 | NaN | 13 | 20161028 | 0 | 2017-02-28 | 2016-10-28 | 123 | 17 | 17 | 1 | 1626.704 | 1626.704 | 15 | 15 | 1 | 1 | 1 | 1 | 0 | 0 | 6 | 6 | 121 | 24.200000 | 5 | 30054.147 | 6010.829400 | 29 | 5.800000 | 10 | 2.000000 | 5 | 1.000000 | 2 | 0.400000 | 123 | 24.600000 | 192 | 17.454545 | 11 | 43518.795 | 3956.254091 | 44 | 4.000000 | 11 | 1.000000 | 7 | 0.636364 | 7 | 0.636364 | 174 | 15.818182 | 457 | 17.576923 | 26 | 111841.140 | 4301.582308 | 80 | 3.076923 | 23 | 0.884615 | 15 | 0.576923 | 15 | 0.576923 | 456 | 17.538462 | 1229 | 21.189655 | 58 | 287422.839 | 4955.566190 | 203 | 3.500000 | 81 | 1.396552 | 55 | 0.948276 | 60 | 1.034483 | 1145 | 19.741379 | 1441 | 20.884058 | 69 | 326268.069 | 4728.522739 | 247 | 3.579710 | 115 | 1.666667 | 74 | 1.072464 | 76 | 1.101449 | 1272 | 18.434783 | 1441 | 20.884058 | 69 | 326268.069 | 4728.522739 | 247 | 3.579710 | 115 | 1.666667 | 74 | 1.072464 | 76 | 1.101449 | 1272 | 18.434783 | 1441 | 20.884058 | 69 | 326268.069 | 4728.522739 | 247 | 3.579710 | 115 | 1.666667 | 74 | 1.072464 | 76 | 1.101449 | 1272 | 18.434783 | 150 | 596 | 3.973333 | 0 | 0.0 | 30 | 30 | 149 | 149 | 1 | 20170228 | 20170327 | 0 | 4.966667 |

| EqSHZpMj5uddJvv2gXcHvuOKFOdS5NN6RalHfzEhhaI= | 1 | 0 | NaN | 13 | 20161004 | 0 | 2016-10-26 | 2016-10-04 | 22 | 1 | 1 | 1 | 156.204 | 156.204 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 14 | 7.000000 | 2 | 2399.824 | 1199.912000 | 4 | 2.000000 | 5 | 2.500000 | 4 | 2.000000 | 0 | 0.000000 | 5 | 2.500000 | 17 | 4.250000 | 4 | 2630.818 | 657.704500 | 5 | 1.250000 | 7 | 1.750000 | 4 | 1.000000 | 0 | 0.000000 | 5 | 1.250000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 | 150 | 645 | 4.300000 | 0 | 0.0 | 30 | 30 | 129 | 129 | 1 | 20170204 | 20170303 | 0 | 4.300000 |
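Because every step uses an inner join, each one can only shrink the set of users. The sketch below shows one way the row count could be tracked across such a chain. The toy tables, their contents, and the helper `make_table` are hypothetical and are not the project's data.

```python
import pandas as pd


def make_table(column, ids):
    """Build a one-column toy feature table indexed by msno."""
    return pd.DataFrame(
        {column: list(range(len(ids)))}, index=pd.Index(ids, name="msno")
    )


# Hypothetical stand-ins for the four sources joined above.
tables = {
    "members": make_table("city", ["a", "b", "c", "d"]),
    "labels": make_table("is_churn", ["a", "b", "c"]),
    "user_logs_features": make_table("listening_tenure", ["b", "c", "d"]),
    "transactions_features": make_table("total_plan_days", ["a", "b", "c"]),
}

combined = None
row_counts = {}
for name, table in tables.items():
    # Start from the first table, then inner-join each later one on the index.
    combined = table if combined is None else combined.join(table, how="inner")
    row_counts[name] = len(combined)

print(row_counts)
print(f"{len(combined)} entries, {len(combined.columns)} columns")
```

A sharp drop at one step would point to the table whose user coverage is limiting the final data set.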

Data Manipulation

Having joined all the features into a single data frame, we now perform some data manipulation tasks to prepare the table for predictive modeling.

First, we fix the column headers by collapsing each tuple header, such as (within_days_1, num_unq, sum), into a single string:

#Fix column headers

df_fa.columns = df_fa.columns.map(''.join)

df_fa.head()

| city | bd | gender | registered_via | registration_init_time | is_churn | date_featuresdatemax_date | date_featuresdatemin_date | date_featuresdatelistening_tenure | within_days_1num_unqsum | within_days_1num_unqmean | within_days_1num_unqcount | within_days_1total_secssum | within_days_1total_secsmean | within_days_1num_25sum | within_days_1num_25mean | within_days_1num_50sum | within_days_1num_50mean | within_days_1num_75sum | within_days_1num_75mean | within_days_1num_985sum | within_days_1num_985mean | within_days_1num_100sum | within_days_1num_100mean | within_days_7num_unqsum | within_days_7num_unqmean | within_days_7num_unqcount | within_days_7total_secssum | within_days_7total_secsmean | within_days_7num_25sum | within_days_7num_25mean | within_days_7num_50sum | within_days_7num_50mean | within_days_7num_75sum | within_days_7num_75mean | within_days_7num_985sum | within_days_7num_985mean | within_days_7num_100sum | within_days_7num_100mean | within_days_14num_unqsum | within_days_14num_unqmean | within_days_14num_unqcount | within_days_14total_secssum | within_days_14total_secsmean | within_days_14num_25sum | within_days_14num_25mean | within_days_14num_50sum | within_days_14num_50mean | within_days_14num_75sum | within_days_14num_75mean | within_days_14num_985sum | within_days_14num_985mean | within_days_14num_100sum | within_days_14num_100mean | within_days_31num_unqsum | within_days_31num_unqmean | within_days_31num_unqcount | within_days_31total_secssum | within_days_31total_secsmean | within_days_31num_25sum | within_days_31num_25mean | within_days_31num_50sum | within_days_31num_50mean | within_days_31num_75sum | within_days_31num_75mean | within_days_31num_985sum | within_days_31num_985mean | within_days_31num_100sum | within_days_31num_100mean | within_days_90num_unqsum | within_days_90num_unqmean | within_days_90num_unqcount | within_days_90total_secssum | within_days_90total_secsmean | within_days_90num_25sum | within_days_90num_25mean | within_days_90num_50sum | within_days_90num_50mean | within_days_90num_75sum | within_days_90num_75mean | within_days_90num_985sum | within_days_90num_985mean | within_days_90num_100sum | within_days_90num_100mean | within_days_180num_unqsum | within_days_180num_unqmean | within_days_180num_unqcount | within_days_180total_secssum | within_days_180total_secsmean | within_days_180num_25sum | within_days_180num_25mean | within_days_180num_50sum | within_days_180num_50mean | within_days_180num_75sum | within_days_180num_75mean | within_days_180num_985sum | within_days_180num_985mean | within_days_180num_100sum | within_days_180num_100mean | within_days_365num_unqsum | within_days_365num_unqmean | within_days_365num_unqcount | within_days_365total_secssum | within_days_365total_secsmean | within_days_365num_25sum | within_days_365num_25mean | within_days_365num_50sum | within_days_365num_50mean | within_days_365num_75sum | within_days_365num_75mean | within_days_365num_985sum | within_days_365num_985mean | within_days_365num_100sum | within_days_365num_100mean | within_days_9999num_unqsum | within_days_9999num_unqmean | within_days_9999num_unqcount | within_days_9999total_secssum | within_days_9999total_secsmean | within_days_9999num_25sum | within_days_9999num_25mean | within_days_9999num_50sum | within_days_9999num_50mean | within_days_9999num_75sum | within_days_9999num_75mean | within_days_9999num_985sum | within_days_9999num_985mean | within_days_9999num_100sum | within_days_9999num_100mean | total_plan_days | total_amount_paid | amount_paid_per_day | diff_renewal_duration | diff_plan_amount_paid_per_day | latest_payment_method_id | latest_plan_days | latest_plan_list_price | latest_amount_paid | latest_auto_renew | latest_transaction_date | latest_expire_date | latest_is_cancel | latest_amount_paid_per_day | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| msno | |||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

| mKfgXQAmVeSKzN4rXW37qz0HbGCuYBspTBM3ONXZudg= | 1 | 0 | NaN | 13 | 20170120 | 0 | 2017-02-24 | 2017-01-20 | 35 | 1 | 1 | 1 | 10.068 | 10.068 | 2 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1.000000 | 1 | 10.068 | 10.068000 | 2 | 2.000000 | 0 | 0.000000 | 0 | 0.000000 | 0 | 0.000000 | 0 | 0.000000 | 1 | 1.000000 | 1 | 10.068 | 10.068000 | 2 | 2.000000 | 0 | 0.000000 | 0 | 0.000000 | 0 | 0.000000 | 0 | 0.000000 | 27 | 6.750000 | 4 | 3158.450 | 789.612500 | 33 | 8.250000 | 1 | 0.250000 | 1 | 0.250000 | 1 | 0.250000 | 9 | 2.250000 | 29 | 4.833333 | 6 | 3245.638 | 540.939667 | 41 | 6.833333 | 1 | 0.166667 | 1 | 0.166667 | 1 | 0.166667 | 9 | 1.500000 | 29 | 4.833333 | 6 | 3245.638 | 540.939667 | 41 | 6.833333 | 1 | 0.166667 | 1 | 0.166667 | 1 | 0.166667 | 9 | 1.500000 | 29 | 4.833333 | 6 | 3245.638 | 540.939667 | 41 | 6.833333 | 1 | 0.166667 | 1 | 0.166667 | 1 | 0.166667 | 9 | 1.500000 | 29 | 4.833333 | 6 | 3245.638 | 540.939667 | 41 | 6.833333 | 1 | 0.166667 | 1 | 0.166667 | 1 | 0.166667 | 9 | 1.500000 | 60 | 258 | 4.300000 | 0 | 0.0 | 30 | 30 | 129 | 129 | 1 | 20170220 | 20170319 | 0 | 4.300000 |

| AFcKYsrudzim8OFa+fL/c9g5gZabAbhaJnoM0qmlJfo= | 1 | 0 | NaN | 13 | 20160907 | 0 | 2017-02-27 | 2016-09-07 | 173 | 21 | 21 | 1 | 2633.631 | 2633.631 | 13 | 13 | 3 | 3 | 1 | 1 | 1 | 1 | 8 | 8 | 228 | 32.571429 | 7 | 32731.138 | 4675.876857 | 140 | 20.000000 | 29 | 4.142857 | 14 | 2.000000 | 20 | 2.857143 | 95 | 13.571429 | 512 | 36.571429 | 14 | 98422.408 | 7030.172000 | 301 | 21.500000 | 71 | 5.071429 | 34 | 2.428571 | 42 | 3.000000 | 305 | 21.785714 | 1044 | 36.000000 | 29 | 178909.861 | 6169.305552 | 656 | 22.620690 | 135 | 4.655172 | 61 | 2.103448 | 74 | 2.551724 | 571 | 19.689655 | 4298 | 58.876712 | 73 | 632743.845 | 8667.723904 | 2717 | 37.219178 | 393 | 5.383562 | 188 | 2.575342 | 189 | 2.589041 | 2094 | 28.684932 | 9218 | 59.857143 | 154 | 1232770.399 | 8005.002591 | 5289 | 34.344156 | 838 | 5.441558 | 410 | 2.662338 | 323 | 2.097403 | 4204 | 27.298701 | 9218 | 59.857143 | 154 | 1232770.399 | 8005.002591 | 5289 | 34.344156 | 838 | 5.441558 | 410 | 2.662338 | 323 | 2.097403 | 4204 | 27.298701 | 9218 | 59.857143 | 154 | 1232770.399 | 8005.002591 | 5289 | 34.344156 | 838 | 5.441558 | 410 | 2.662338 | 323 | 2.097403 | 4204 | 27.298701 | 180 | 774 | 4.300000 | 0 | 0.0 | 30 | 30 | 129 | 129 | 1 | 20170207 | 20170306 | 0 | 4.300000 |

| qk4mEZUYZq+4sQE7bzRYKc5Pvj+Xc7Wmu25DrCzltEU= | 1 | 0 | NaN | 13 | 20160902 | 0 | 2017-02-26 | 2016-09-02 | 177 | 1 | 1 | 1 | 271.093 | 271.093 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 243 | 34.714286 | 7 | 60581.740 | 8654.534286 | 3 | 0.428571 | 1 | 0.142857 | 2 | 0.285714 | 1 | 0.142857 | 238 | 34.000000 | 489 | 34.928571 | 14 | 122772.792 | 8769.485143 | 32 | 2.285714 | 2 | 0.142857 | 5 | 0.357143 | 11 | 0.785714 | 476 | 34.000000 | 899 | 32.107143 | 28 | 231622.820 | 8272.243571 | 73 | 2.607143 | 7 | 0.250000 | 11 | 0.392857 | 14 | 0.500000 | 893 | 31.892857 | 2396 | 35.235294 | 68 | 770040.608 | 11324.126588 | 180 | 2.647059 | 32 | 0.470588 | 32 | 0.470588 | 33 | 0.485294 | 2953 | 43.426471 | 3580 | 32.844037 | 109 | 1137009.556 | 10431.280330 | 423 | 3.880734 | 72 | 0.660550 | 58 | 0.532110 | 58 | 0.532110 | 4308 | 39.522936 | 3580 | 32.844037 | 109 | 1137009.556 | 10431.280330 | 423 | 3.880734 | 72 | 0.660550 | 58 | 0.532110 | 58 | 0.532110 | 4308 | 39.522936 | 3580 | 32.844037 | 109 | 1137009.556 | 10431.280330 | 423 | 3.880734 | 72 | 0.660550 | 58 | 0.532110 | 58 | 0.532110 | 4308 | 39.522936 | 180 | 774 | 4.300000 | 0 | 0.0 | 30 | 30 | 129 | 129 | 1 | 20170202 | 20170301 | 0 | 4.300000 |

| G2UGNLph2J6euGmZ7WIa1+Kc+dPZBJI0HbLPu5YtrZw= | 1 | 0 | NaN | 13 | 20161028 | 0 | 2017-02-28 | 2016-10-28 | 123 | 17 | 17 | 1 | 1626.704 | 1626.704 | 15 | 15 | 1 | 1 | 1 | 1 | 0 | 0 | 6 | 6 | 121 | 24.200000 | 5 | 30054.147 | 6010.829400 | 29 | 5.800000 | 10 | 2.000000 | 5 | 1.000000 | 2 | 0.400000 | 123 | 24.600000 | 192 | 17.454545 | 11 | 43518.795 | 3956.254091 | 44 | 4.000000 | 11 | 1.000000 | 7 | 0.636364 | 7 | 0.636364 | 174 | 15.818182 | 457 | 17.576923 | 26 | 111841.140 | 4301.582308 | 80 | 3.076923 | 23 | 0.884615 | 15 | 0.576923 | 15 | 0.576923 | 456 | 17.538462 | 1229 | 21.189655 | 58 | 287422.839 | 4955.566190 | 203 | 3.500000 | 81 | 1.396552 | 55 | 0.948276 | 60 | 1.034483 | 1145 | 19.741379 | 1441 | 20.884058 | 69 | 326268.069 | 4728.522739 | 247 | 3.579710 | 115 | 1.666667 | 74 | 1.072464 | 76 | 1.101449 | 1272 | 18.434783 | 1441 | 20.884058 | 69 | 326268.069 | 4728.522739 | 247 | 3.579710 | 115 | 1.666667 | 74 | 1.072464 | 76 | 1.101449 | 1272 | 18.434783 | 1441 | 20.884058 | 69 | 326268.069 | 4728.522739 | 247 | 3.579710 | 115 | 1.666667 | 74 | 1.072464 | 76 | 1.101449 | 1272 | 18.434783 | 150 | 596 | 3.973333 | 0 | 0.0 | 30 | 30 | 149 | 149 | 1 | 20170228 | 20170327 | 0 | 4.966667 |

| EqSHZpMj5uddJvv2gXcHvuOKFOdS5NN6RalHfzEhhaI= | 1 | 0 | NaN | 13 | 20161004 | 0 | 2016-10-26 | 2016-10-04 | 22 | 1 | 1 | 1 | 156.204 | 156.204 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 14 | 7.000000 | 2 | 2399.824 | 1199.912000 | 4 | 2.000000 | 5 | 2.500000 | 4 | 2.000000 | 0 | 0.000000 | 5 | 2.500000 | 17 | 4.250000 | 4 | 2630.818 | 657.704500 | 5 | 1.250000 | 7 | 1.750000 | 4 | 1.000000 | 0 | 0.000000 | 5 | 1.250000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 | 136 | 13.600000 | 10 | 15562.900 | 1556.290000 | 76 | 7.600000 | 36 | 3.600000 | 22 | 2.200000 | 7 | 0.700000 | 21 | 2.100000 | 150 | 645 | 4.300000 | 0 | 0.0 | 30 | 30 | 129 | 129 | 1 | 20170204 | 20170303 | 0 | 4.300000 |

Next, we replace infinite and missing ('na') values with -9999, a sentinel far outside the normal range of these features, so that our algorithms can treat the affected rows as distinct.

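#Handle bad values: replace +/-inf (and NaN, for the plan difference) with the sentinel -9999

#Assign the result back to the column; inplace=True on a column selection may not update df_fa in newer pandas versions

df_fa['amount_paid_per_day'] = df_fa['amount_paid_per_day'].replace([np.inf, -np.inf], -9999)

df_fa['latest_amount_paid_per_day'] = df_fa['latest_amount_paid_per_day'].replace([np.inf, -np.inf], -9999)

df_fa['diff_plan_amount_paid_per_day'] = df_fa['diff_plan_amount_paid_per_day'].replace([np.inf, -np.inf], -9999).fillna(-9999)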

df_fa.isnull().any()

city False

bd False

gender True

registered_via False

registration_init_time False

is_churn False

date_featuresdatemax_date False

date_featuresdatemin_date False

date_featuresdatelistening_tenure False

within_days_1num_unqsum False

within_days_1num_unqmean False

within_days_1num_unqcount False

within_days_1total_secssum False

within_days_1total_secsmean False

within_days_1num_25sum False

within_days_1num_25mean False

within_days_1num_50sum False

within_days_1num_50mean False

within_days_1num_75sum False

within_days_1num_75mean False

within_days_1num_985sum False

within_days_1num_985mean False

within_days_1num_100sum False

within_days_1num_100mean False

within_days_7num_unqsum False

within_days_7num_unqmean False

within_days_7num_unqcount False

within_days_7total_secssum False

within_days_7total_secsmean False

within_days_7num_25sum False

within_days_7num_25mean False

within_days_7num_50sum False

within_days_7num_50mean False

within_days_7num_75sum False

within_days_7num_75mean False

within_days_7num_985sum False

within_days_7num_985mean False

within_days_7num_100sum False

within_days_7num_100mean False

within_days_14num_unqsum False

within_days_14num_unqmean False

within_days_14num_unqcount False

within_days_14total_secssum False

within_days_14total_secsmean False

within_days_14num_25sum False

within_days_14num_25mean False

within_days_14num_50sum False

within_days_14num_50mean False

within_days_14num_75sum False

within_days_14num_75mean False

within_days_14num_985sum False

within_days_14num_985mean False

within_days_14num_100sum False

within_days_14num_100mean False

within_days_31num_unqsum False

within_days_31num_unqmean False

within_days_31num_unqcount False

within_days_31total_secssum False

within_days_31total_secsmean False

within_days_31num_25sum False

within_days_31num_25mean False

within_days_31num_50sum False

within_days_31num_50mean False

within_days_31num_75sum False

within_days_31num_75mean False

within_days_31num_985sum False

within_days_31num_985mean False

within_days_31num_100sum False

within_days_31num_100mean False

within_days_90num_unqsum False

within_days_90num_unqmean False

within_days_90num_unqcount False

within_days_90total_secssum False

within_days_90total_secsmean False

within_days_90num_25sum False

within_days_90num_25mean False

within_days_90num_50sum False

within_days_90num_50mean False

within_days_90num_75sum False

within_days_90num_75mean False

within_days_90num_985sum False

within_days_90num_985mean False

within_days_90num_100sum False

within_days_90num_100mean False

within_days_180num_unqsum False

within_days_180num_unqmean False

within_days_180num_unqcount False

within_days_180total_secssum False

within_days_180total_secsmean False

within_days_180num_25sum False

within_days_180num_25mean False

within_days_180num_50sum False

within_days_180num_50mean False

within_days_180num_75sum False

within_days_180num_75mean False

within_days_180num_985sum False

within_days_180num_985mean False

within_days_180num_100sum False

within_days_180num_100mean False

within_days_365num_unqsum False

within_days_365num_unqmean False

within_days_365num_unqcount False

within_days_365total_secssum False

within_days_365total_secsmean False

within_days_365num_25sum False

within_days_365num_25mean False

within_days_365num_50sum False

within_days_365num_50mean False

within_days_365num_75sum False

within_days_365num_75mean False

within_days_365num_985sum False

within_days_365num_985mean False

within_days_365num_100sum False

within_days_365num_100mean False

within_days_9999num_unqsum False

within_days_9999num_unqmean False

within_days_9999num_unqcount False

within_days_9999total_secssum False

within_days_9999total_secsmean False

within_days_9999num_25sum False

within_days_9999num_25mean False

within_days_9999num_50sum False

within_days_9999num_50mean False

within_days_9999num_75sum False

within_days_9999num_75mean False

within_days_9999num_985sum False

within_days_9999num_985mean False

within_days_9999num_100sum False

within_days_9999num_100mean False

total_plan_days False

total_amount_paid False

amount_paid_per_day False

diff_renewal_duration False

diff_plan_amount_paid_per_day False

latest_payment_method_id False

latest_plan_days False

latest_plan_list_price False

latest_amount_paid False

latest_auto_renew False

latest_transaction_date False

latest_expire_date False

latest_is_cancel False

latest_amount_paid_per_day False

dtype: bool

The cell above verifies that, apart from gender, no column in our data contains null values.
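The clean-up and null check can also be written as one loop over the affected columns. The sketch below is a minimal illustration on a toy frame, not the notebook's own cell: `SENTINEL`, `replace_bad_values`, and `toy` are hypothetical names, and the values are made up. Unlike the cell above, it fills NaN in every listed column, which is an assumption about what is wanted rather than what the notebook does.

```python
import numpy as np
import pandas as pd

SENTINEL = -9999  # same out-of-range marker used above

def replace_bad_values(df, columns, sentinel=SENTINEL):
    """Return a copy of df with +/-inf and NaN in `columns` set to `sentinel`."""
    out = df.copy()
    for col in columns:
        # Assign the cleaned column back to the copy
        out[col] = out[col].replace([np.inf, -np.inf], sentinel).fillna(sentinel)
    return out

# Toy data standing in for df_fa (values invented for illustration)
toy = pd.DataFrame({
    'amount_paid_per_day': [4.3, np.inf, 3.9],
    'diff_plan_amount_paid_per_day': [0.0, np.nan, -np.inf],
    'gender': ['male', None, 'female'],
})

cleaned = replace_bad_values(
    toy, ['amount_paid_per_day', 'diff_plan_amount_paid_per_day'])

# List only the columns that still contain nulls
null_cols = cleaned.columns[cleaned.isnull().any()].tolist()
print(null_cols)
```

Listing only the columns that still contain nulls is easier to read than scanning the full True/False output when the frame has this many features.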

Now let's inspect our data types:

df_fa.dtypes

city int64

bd int64

gender object

registered_via int64

registration_init_time int64

is_churn int64

date_featuresdatemax_date datetime64[ns]

date_featuresdatemin_date datetime64[ns]

date_featuresdatelistening_tenure int64

within_days_1num_unqsum int64

within_days_1num_unqmean int64

within_days_1num_unqcount int64

within_days_1total_secssum float64

within_days_1total_secsmean float64

within_days_1num_25sum int64

within_days_1num_25mean int64

within_days_1num_50sum int64

within_days_1num_50mean int64

within_days_1num_75sum int64

within_days_1num_75mean int64

within_days_1num_985sum int64

within_days_1num_985mean int64

within_days_1num_100sum int64

within_days_1num_100mean int64

within_days_7num_unqsum int64

within_days_7num_unqmean float64

within_days_7num_unqcount int64

within_days_7total_secssum float64

within_days_7total_secsmean float64

within_days_7num_25sum int64

within_days_7num_25mean float64

within_days_7num_50sum int64

within_days_7num_50mean float64

within_days_7num_75sum int64

within_days_7num_75mean float64

within_days_7num_985sum int64

within_days_7num_985mean float64

within_days_7num_100sum int64

within_days_7num_100mean float64

within_days_14num_unqsum int64

within_days_14num_unqmean float64

within_days_14num_unqcount int64

within_days_14total_secssum float64

within_days_14total_secsmean float64

within_days_14num_25sum int64

within_days_14num_25mean float64

within_days_14num_50sum int64

within_days_14num_50mean float64

within_days_14num_75sum int64

within_days_14num_75mean float64

within_days_14num_985sum int64

within_days_14num_985mean float64

within_days_14num_100sum int64

within_days_14num_100mean float64

within_days_31num_unqsum int64

within_days_31num_unqmean float64

within_days_31num_unqcount int64

within_days_31total_secssum float64

within_days_31total_secsmean float64

within_days_31num_25sum int64

within_days_31num_25mean float64

within_days_31num_50sum int64

within_days_31num_50mean float64

within_days_31num_75sum int64

within_days_31num_75mean float64

within_days_31num_985sum int64

within_days_31num_985mean float64

within_days_31num_100sum int64

within_days_31num_100mean float64

within_days_90num_unqsum int64

within_days_90num_unqmean float64

within_days_90num_unqcount int64

within_days_90total_secssum float64

within_days_90total_secsmean float64

within_days_90num_25sum int64

within_days_90num_25mean float64

within_days_90num_50sum int64

within_days_90num_50mean float64

within_days_90num_75sum int64

within_days_90num_75mean float64

within_days_90num_985sum int64

within_days_90num_985mean float64

within_days_90num_100sum int64

within_days_90num_100mean float64

within_days_180num_unqsum int64

within_days_180num_unqmean float64

within_days_180num_unqcount int64

within_days_180total_secssum float64

within_days_180total_secsmean float64

within_days_180num_25sum int64

within_days_180num_25mean float64

within_days_180num_50sum int64

within_days_180num_50mean float64

within_days_180num_75sum int64

within_days_180num_75mean float64

within_days_180num_985sum int64

within_days_180num_985mean float64

within_days_180num_100sum int64

within_days_180num_100mean float64

within_days_365num_unqsum int64

within_days_365num_unqmean float64

within_days_365num_unqcount int64

within_days_365total_secssum float64

within_days_365total_secsmean float64

within_days_365num_25sum int64

within_days_365num_25mean float64

within_days_365num_50sum int64

within_days_365num_50mean float64

within_days_365num_75sum int64

within_days_365num_75mean float64

within_days_365num_985sum int64

within_days_365num_985mean float64

within_days_365num_100sum int64

within_days_365num_100mean float64

within_days_9999num_unqsum int64

within_days_9999num_unqmean float64

within_days_9999num_unqcount int64

within_days_9999total_secssum float64

within_days_9999total_secsmean float64

within_days_9999num_25sum int64

within_days_9999num_25mean float64

within_days_9999num_50sum int64

within_days_9999num_50mean float64

within_days_9999num_75sum int64

within_days_9999num_75mean float64

within_days_9999num_985sum int64

within_days_9999num_985mean float64

within_days_9999num_100sum int64

within_days_9999num_100mean float64

total_plan_days int64

total_amount_paid int64

amount_paid_per_day float64

diff_renewal_duration int64

diff_plan_amount_paid_per_day float64

latest_payment_method_id int64

latest_plan_days int64

latest_plan_list_price int64

latest_amount_paid int64

latest_auto_renew int64

latest_transaction_date int64

latest_expire_date int64

latest_is_cancel int64

latest_amount_paid_per_day float64

dtype: object
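With this many columns, the full listing is hard to scan. As a hedged sketch (the toy frame and its values are invented for illustration, and the variable names are hypothetical), the dtypes can be summarised by count and the non-numeric columns pulled out by name:

```python
import pandas as pd

# Toy frame with one column of each kind seen in the listing above
toy = pd.DataFrame({
    'city': [1, 13],
    'gender': ['male', None],
    'date_featuresdatemax_date': pd.to_datetime(['2017-02-26', '2017-02-28']),
    'amount_paid_per_day': [4.3, 3.97],
})

# How many columns of each dtype are there?
dtype_counts = toy.dtypes.astype(str).value_counts()
print(dtype_counts)

# Which columns are datetimes and will need converting?
datetime_cols = toy.select_dtypes(include=['datetime']).columns.tolist()

# Which columns are not numeric at all?
non_numeric_cols = toy.select_dtypes(exclude=['number']).columns.tolist()
print(datetime_cols, non_numeric_cols)
```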

The listing shows two datetime columns (date_featuresdatemax_date and date_featuresdatemin_date). We need to convert these, because the ML algorithms expect numeric input. The function below splits a datetime column into 4 separate integer columns.

def split_date_col(date_col_name):
    """Function that takes a column of datetime64[ns] items in df_fa and converts it into 4 columns:

    1) Year integer
    2) Month integer
    3) Day integer
    4) Days since January 1, 2000, as an integer

    It then deletes the original date column.

    Args:
        date_col_name (string): The column name, as a string.
    """
    # Calendar components
    df_fa[date_col_name + '_year'] = df_fa[date_col_name].dt.year
    df_fa[date_col_name + '_month'] = df_fa[date_col_name].dt.month
    df_fa[date_col_name + '_day'] = df_fa[date_col_name].dt.day

    # Whole days elapsed since the reference date; .dt.days avoids the
    # astype('timedelta64[D]') cast, which newer pandas versions reject
    df_fa[date_col_name + '_absday'] = ((df_fa[date_col_name] - pd.to_datetime('1/1/2000'))
                                        .dt.days
                                        .astype('int64')
                                        )

    # Drop the original datetime column
    df_fa.drop(date_col_name, axis=1, inplace=True)

#Only run this cell once, else it will fail on the date columns it deletes

split_date_col('date_featuresdatemax_date')

split_date_col('date_featuresdatemin_date')
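Because `split_date_col` drops its source column, a second run of the cell raises a `KeyError`. One possible way to make the step safe to re-run is sketched below. `split_date_col_safe`, `REFERENCE_DATE`, and the toy frame are hypothetical names, and the function takes the DataFrame as an argument instead of relying on the global `df_fa`; it keeps the same 1 January 2000 reference date as the code above.

```python
import pandas as pd

REFERENCE_DATE = pd.Timestamp('2000-01-01')  # same origin as the cell above

def split_date_col_safe(df, date_col_name):
    """Split a datetime column into year/month/day/absday columns, in place.

    Does nothing if the column is already gone, so re-running is harmless.
    """
    if date_col_name not in df.columns:
        return df
    dates = df[date_col_name]
    df[date_col_name + '_year'] = dates.dt.year
    df[date_col_name + '_month'] = dates.dt.month
    df[date_col_name + '_day'] = dates.dt.day
    # Whole days elapsed since the reference date
    df[date_col_name + '_absday'] = (dates - REFERENCE_DATE).dt.days
    df.drop(date_col_name, axis=1, inplace=True)
    return df

# Toy data (values invented for illustration)
toy = pd.DataFrame({'max_date': pd.to_datetime(['2017-02-26', '2000-01-02'])})
split_date_col_safe(toy, 'max_date')
split_date_col_safe(toy, 'max_date')  # second call is a no-op
print(toy)
```

Passing the frame in explicitly also makes the function easier to test on a small sample before applying it to the full feature set.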

Now let's re-check our data types:

df_fa.dtypes

city int64

bd int64

gender object

registered_via int64

registration_init_time int64

is_churn int64

date_featuresdatelistening_tenure int64

within_days_1num_unqsum int64

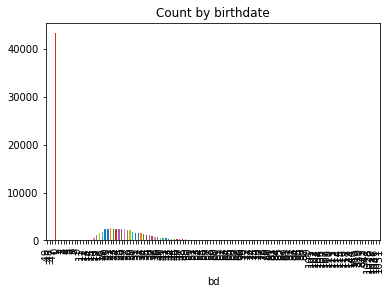

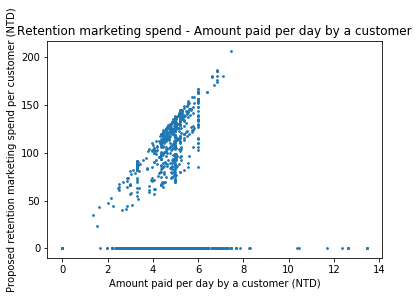

within_days_1num_unqmean int64